The SEMIC Core Vocabularies Handbook

- 1. Introduction

- 2. Creating a new data model from an existing Core Vocabulary

- 2.1. Create a new XSD schema from a Core Vocabulary (UC1.1)

- 2.2. Create a new JSON-LD context definition from a Core Vocabulary (UC1.2)

- 3. Mapping an existing model to Core Vocabularies

- 3.1. Map an existing conceptual model to a Core Vocabulary (UC2.1)

- 3.1.1. Use case description

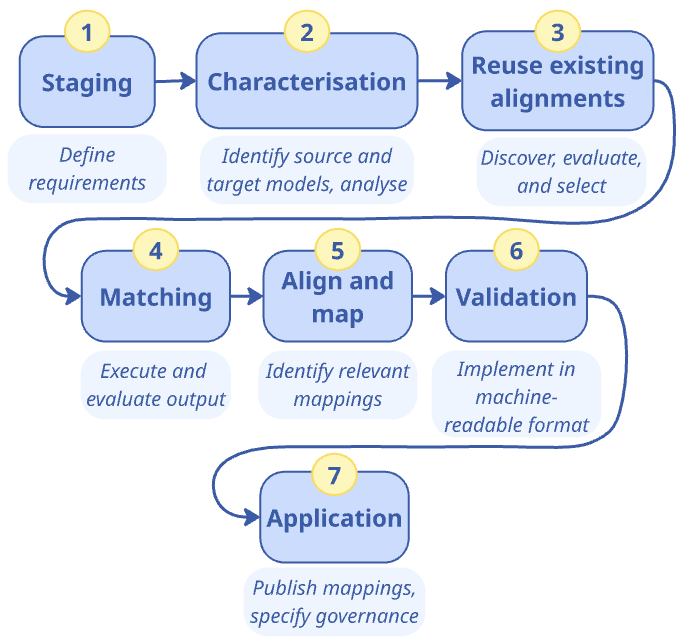

- 3.1.2. Guidelines on how to map an existing conceptual model to a Core Vocabulary

- 3.1.3. Tutorial: Map Schema.org to the Core Business Vocabulary

- 3.1.3.1. Phase 1: Staging (Defining the requirements)

- 3.1.3.2. Phase 2: Characterisation (Defining source and target models)

- 3.1.3.3. Phase 3: Reuse of existing mappings

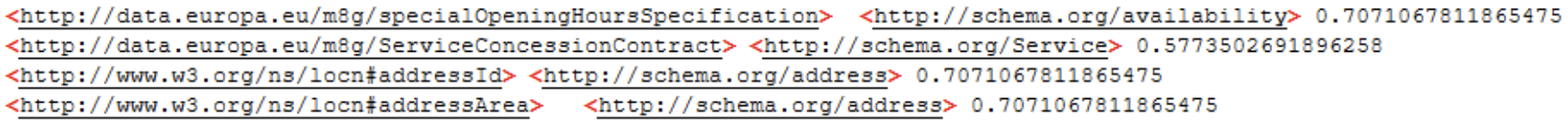

- 3.1.3.4. Phase 4: Matching (execute and filter matching candidates)

- 3.1.3.5. Phase 5: Validate alignments

- 3.1.3.6. Phase 6: Application (operationalise the mappings)

- 3.2. Map an existing XSD Schema to a Core Vocabulary (UC2.2)

- 4. Concluding remarks

- Appendix: Additional Use Cases

- Glossary

- References

1. Introduction

This Handbook explains the role of Core Vocabularies in enabling semantic interoperability at the EU level and provides a practical guide for public administrations to use them. It is intended for business users who wish to understand how the Core Vocabularies can be useful, and for semantic engineers who seek straightforward guidance for specific use cases.

For first-time readers of this Handbook, we recommend starting with the remainder of this section, where interoperability is introduced, the role of Core Vocabularies is explained, and the important use cases are summarised.

Readers familiar with the SEMIC Core Vocabularies and seeking practical guidance are advised to go directly to the main part of the Handbook, which describes use cases, methodology recommendations, and tutorials for:

- creating new semantic data specifications or stand-alone data models by using Core Vocabularies, and

- mapping existing data models to Core Vocabularies.

1.1. Intended audience

This handbook is intended for two main audiences: 1) administrative professionals, including policy officers and possibly also legal experts, and 2) technical experts and IT professionals. Public administrations involve both legal/administrative experts and technical professionals. While they may not always “speak the same language”, they must work together to ensure smooth digital transformation. Semantic interoperability provides the common foundation that allows them to bridge their disciplinary differences and find common ground, enabling effective collaboration and thereby contributing to improved public services. Each intended audience will gain new insights relevant for their respective roles.

Administrative Professionals & Legal Experts

By reading this handbook, domain experts will:

- Understand the role of semantic interoperability at the EU level, which might also be of use at the national, regional, and local level.

- Gain insight into how structured data and shared vocabularies enhance legal clarity, data exchange, and cross-border cooperation.

- Gain insight into how interoperability supports public services and reduces administrative burdens.

It is expected that this will facilitate coordination with technical teams, so that interoperability initiatives meet both legal and operational requirements, and will help administrative professionals and legal experts make informed decisions when prioritising IT projects that align with interoperability goals.

Technical Experts & IT Professionals

By engaging with this handbook as both a reference manual and a practical guide, the technical experts and IT professionals who design, implement, and maintain the software ecosystem will:

- Learn how to design and implement interoperable systems using the Core Vocabularies and semantic data models.

- Understand methodologies for creating, mapping, and integrating semantic data models in their systems.

- Be able to apply best practices for data exchange, ensuring consistency and accuracy across different systems.

- Use standardised approaches to enhance data accessibility, transparency, and reuse in line with FAIR principles [fair].

- Ensure compliance with the SEMIC Style Guide rules & principles [sem-sg].

It is expected that this will not only facilitate communication with the domain experts, but also further streamline software development that conforms to the user specification and, ultimately, benefit the citizens, who gain from more smoothly functioning digital services.

1.2. Structure of the Handbook

The Handbook has two types of content:

- Explanatory Sections: Intended for administrative professionals and legal experts. They explain interoperability and the role of Core Vocabularies, and describe relevant use cases. They help non-technical stakeholders understand why semantic interoperability matters and how it supports policy implementation. Each use case is also accompanied by a business case scenario and a user story.

- Practical Guidance Sections: Designed for technical experts, data architects, and IT professionals. They provide methodologies and step-by-step tutorials for adopting and implementing Core Vocabularies, including instructions on creating new semantic data specifications by extending Core Vocabularies, mapping existing data models to them, and ensuring interoperability through standardised practices.

The structure of the main part of the Handbook is as follows. First, the notion of interoperability and the principal use cases are introduced, which feature the most common, challenging, and interesting scenarios. This is followed by two key chapters, on creating new models and on mapping existing models, which describe and illustrate the principal use cases. Each use case has a description intended for administrative professionals and legal experts, and guidelines for implementation that describe how to accomplish the goal of the use case, which are then demonstrated in a tutorial. The guidelines and tutorials are aimed at technical experts.

Finally, the back matter contains additional use cases not covered in detail in this handbook (in the Appendix), a glossary of terms, and the references.

1.3. Interoperability and Core Vocabularies

This section introduces what interoperability is, what makes it semantic, and how Core Vocabularies, which specify key concepts in human-readable and machine-processable formats, contribute to it.

Sharing data easily is indispensable for effective and efficient public services. Sharing may be done through offering one point of access, and often involves reusing the data in multiple applications across multiple departments and organisations. To make this work, the information systems need to be interoperable.

1.3.1. What is interoperability?

Following the European Interoperability Framework (EIF) [eif], interoperability is defined as “the ability of organisations to interact towards mutually beneficial goals, involving the sharing of information and knowledge between these organisations, through the business processes they support, by means of the exchange of data between their ICT systems”, where, for the purpose of EIF, ‘organisations’ refers to “public administration units or any entity acting on their behalf, or EU institutions or bodies”. It is what “enables administrations to cooperate and make public services function across borders, across sectors and across organisational boundaries”, including enabling “public sector actors to connect, cooperate and exchange data while safeguarding sovereignty and subsidiarity”, as described in the EC communication accompanying the Interoperable Europe Act [int-eu].

Existing acts and frameworks related to interoperability

The European Union has formulated acts, regulations, and frameworks that aim to foster interoperability, including the Data Act [data-act], the Data Governance Act [dga], the European Interoperability Framework (EIF) [eif], and the Interoperable Europe Act [iea24], which underscore the importance of harmonised data practices across Member States. These acts and frameworks emphasise that true interoperability goes far beyond just connecting systems at a technical level.

The EU Data Act is a legislative framework aimed at enhancing the EU’s data economy by improving access to data for individuals and businesses. It entered into force on 11 January 2024 and is designed to ensure fairness in data allocation and encourage data-driven innovation.

The Data Governance Act (DGA) is a regulation by the European Union aimed at facilitating data sharing and increasing trust in data usage. It establishes a framework for the reuse of publicly held data and encourages the sharing of data for altruistic purposes, while also regulating data intermediaries to enhance data availability and overcome technical barriers. The act is part of the broader European strategy for data, which seeks to create a more integrated and efficient data economy.

The EIF provides specific guidance on how to set up interoperable digital public services. It gives guidance, through a set of recommendations, to public administrations on how to improve governance of their interoperability activities, establish cross-organisational relationships, streamline processes supporting end-to-end digital services, and ensure that existing and new legislation do not compromise interoperability efforts.

The Interoperable Europe Act, which entered into force on 11 April 2024, aims to enhance cross-border interoperability and cooperation in the public sector across the EU. It is designed to support the objectives of the Digital Decade, ensuring that 100% of key public services are available online by 2030, including those requiring cross-border data exchange. The Act addresses these challenges by creating interoperability tools for public administrations and removing legal, organisational, and technical obstacles. It envisions “an emerging ‘network of networks’ of largely sovereign actors at all levels of government, each with their own legal framework and mandates, yet all interconnected”, i.e., seamless cross-border cooperation, which is to be supported by mandatory interoperability assessments.

1.3.2. What is semantic interoperability and how to achieve it?

While data exchange is an obvious requirement for interoperability, there are fine but crucial distinctions between data format, syntactic, and semantic interoperability. A standardised syntax and data format to store data lets one exchange data, such as creating an SQL database dump in one tool and seamlessly reopening it in another relational database management system, or lets one send and receive emails that arrive properly in each other’s inbox.

Interoperability at the semantic level concerns the meaning of that data. Two parties may use the same format and language to represent data, such as XML, but given a tag <bank> … </bank>, neither software nor humans can determine from the tag alone what sort of bank it encloses: a financial institution or the bank of a river, for instance. Such meaning is defined in artefacts such as vocabularies, thesauri, and ontologies. A <fin:bank> tag in a document may then be an implemented version of its definition at the semantic layer, where the entity has a definition and a number of properties specified in the model (abbreviated with the prefix fin), such as that fin:bank is a type of financial organisation that has a board of directors and a location for its headquarters. This enables not only correct sending and receiving of data, but also exchanging data reliably, accessing the right data when querying for information, obtaining relevant data in the query answer, and merging data.
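To make the `<bank>` example concrete, the following Python sketch (standard library only) shows how XML namespaces keep apart two terms that share the local name `bank`. The namespace IRIs `http://example.org/fin#` and `http://example.org/geo#` are hypothetical placeholders. Note that a namespace only identifies which vocabulary a tag belongs to; the meaning itself still has to be defined in that vocabulary at the semantic layer.

```python
import xml.etree.ElementTree as ET

# Two hypothetical documents: both use the local name "bank", but each prefix
# is bound to a different (made-up) namespace IRI standing for a different vocabulary.
FIN_DOC = """<record xmlns:fin="http://example.org/fin#">
  <fin:bank>Example Bank plc</fin:bank>
</record>"""

GEO_DOC = """<record xmlns:geo="http://example.org/geo#">
  <geo:bank>Left bank of the river</geo:bank>
</record>"""


def qualified_tags(xml_text: str) -> list[str]:
    """Return the namespace-qualified tags of the root element's children.

    ElementTree reports a qualified name as '{namespace-IRI}local-name'.
    """
    root = ET.fromstring(xml_text)
    return [child.tag for child in root]


fin_tags = qualified_tags(FIN_DOC)
geo_tags = qualified_tags(GEO_DOC)
print(fin_tags)  # ['{http://example.org/fin#}bank']
print(geo_tags)  # ['{http://example.org/geo#}bank']
# Same local name, different vocabularies: the tags do not denote the same term.
print(fin_tags[0] == geo_tags[0])  # False
```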

Interoperability thus also has a semantic component, which "refers to the meaning of data elements and the relationship between them" and "includes developing vocabularies and schemata to describe data exchanges" [eif4scc]. According to section 3.5 of the EIF [eif2], semantic interoperability "ensures that the precise format and meaning of exchanged data and information is preserved and understood throughout exchanges between parties, in other words ‘what is sent is what is understood’." One recommended way to achieve semantic interoperability between public administrations is to use semantic assets, such as semantic data models, information models, ontologies, and vocabularies. SEMIC uses models called Core Vocabularies.

1.3.3. What is a Core Vocabulary?

A Core Vocabulary (CV) is a basic, reusable, and extensible specification that captures the relevant characteristics of entities, which can be used to add semantics to data and information in a context-neutral manner [cv-hb]. Its primary purpose is to provide standardised terms that can be reused across various application domains, typically realised as a lightweight ontology (optionally accompanied by a permissive data shape) and documented in a concise specification. Core Vocabularies for SEMIC [sem-sg-cvs] are maintained by the SEMIC action under the Interoperable Europe umbrella of DG DIGIT and are described in the SEMIC Core Vocabularies section below.

1.3.4. SEMIC Core Vocabularies

Since 2011, the European Commission has facilitated international working groups to forge consensus and maintain the SEMIC Core Vocabularies. A short description of these vocabularies is included in the Table below. The latest release of the SEMIC Core Vocabularies can be retrieved via the SEMIC Support Centre [semic] or directly from the GitHub repository [semic-gh] in both human- and machine-readable formats. They are published under the CC-BY 4.0 licence [cc-by]. Henceforth, when we use the term Core Vocabularies, we refer to the SEMIC Core Vocabularies specifically.

| Vocabulary | Description |
|---|---|
| Core Person Vocabulary (CPV) | The Core Person Vocabulary (CPV) [cpv] is a simplified, reusable and extensible vocabulary that captures the fundamental characteristics of a person, e.g., the name, gender, date of birth, and location. This specification enables interoperability among registers and any other ICT-based solutions exchanging and processing person-related information. |
| Core Business Vocabulary (CBV) | The Core Business Vocabulary (CBV) [cbv] is a simplified, reusable and extensible vocabulary that captures the fundamental characteristics of a legal entity, e.g., the legal name, activity, and address. The Core Business Vocabulary includes a minimal number of classes and properties modelled to capture the typical details recorded by business registers. It facilitates information exchange between business registers despite differences in what they record and publish. |
| Core Location Vocabulary (CLV) | The Core Location Vocabulary (CLV) [clv] is a simplified, reusable and extensible vocabulary that captures the fundamental characteristics of a location, represented as an address, a geographic name, or a geometry, and provides a minimum set of classes and properties for describing such locations. This specification enables interoperability among land registers and any other ICT-based solutions exchanging and processing location information. |
| Core Criterion and Core Evidence Vocabulary (CCCEV) | The Core Criterion and Core Evidence Vocabulary (CCCEV) [cccev] supports the exchange of information between organisations that define criteria and organisations that respond to these criteria by means of evidence. The CCCEV addresses specific needs of businesses, public administrations and citizens across the EU, including the following use cases: |
| Core Public Organisation Vocabulary (CPOV) | The Core Public Organisation Vocabulary (CPOV) [cpov] provides a vocabulary for describing public organisations in the European Union. It addresses specific needs of businesses, public administrations and citizens across the European Union, including the following use cases: |
| Core Public Event Vocabulary (CPEV) | The Core Public Event Vocabulary (CPEV) [cpov] is a simplified, reusable and extensible vocabulary that captures the fundamental characteristics of a public event, e.g., the title, the date, the location, the organiser, etc. It aspires to become a common model for describing public events (conferences, summits, etc.) in the European Union. This specification enables interoperability among registers and any other ICT-based solutions exchanging and processing information related to public events. |
| Core Public Service Vocabulary Application Profile (CPSV-AP) | The Core Public Service Vocabulary Application Profile (CPSV-AP) [CPSV-AP] is a vocabulary for describing public services and the associated life and business events. With the CPSV-AP it is possible to: |

The Core Vocabularies are semantic data specifications [sem-sg-wsds] that are disseminated as the following artefacts:

- a lightweight ontology [sem-sg-wio] for vocabulary definition, expressed in OWL [owl2];

- a loose data shape specification [sem-sg-wds], expressed in SHACL [shacl];

- human-readable reference documentation [sem-sg-wdsd] in HTML (based on ReSpec [respec]);

- a conceptual model specification [sem-sg-wcm], expressed in UML Class Diagram notation [uml].

1.3.5. Why Core Vocabularies?

Modern-day information system design has moved the goalposts: systems typically have to operate in an ecosystem of tools and data, which has caused a number of new problems, notably:

- Developers are reinventing the wheel by modelling the same topics over and over again in different organisations, which is a wasteful use of time and other resources.

- The resulting near-duplications and genuine differences cause, at best, delays in interoperability across systems and, at worst, legally inconsistent data, thereby harming individuals or organisations.

- The lack of a high-level common vocabulary causes engineers to create many 1:1 low-level technical mappings between resources, buried in implementations, which become an unmaintainable mesh as more resources are added and the sources are updated more often.

- Individually formatted published datasets require more ad hoc Extract-Transform-Load scripts to reuse frequently needed data, such as cadastre data and company registers, imposing a higher burden on tool developers to create and maintain the data processing scripts.
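A back-of-the-envelope count shows why the mesh of 1:1 mappings becomes unmaintainable. The sketch below assumes one mapping per pair of models, versus one mapping per model to a shared common vocabulary; real mappings may be directional (doubling the pairwise count) or partial, so the numbers only illustrate the growth rate: quadratic for direct mappings, linear for a shared vocabulary.

```python
def pairwise_mappings(n_models: int) -> int:
    """Mappings needed if every pair of models is mapped directly (one per pair)."""
    return n_models * (n_models - 1) // 2


def hub_mappings(n_models: int) -> int:
    """Mappings needed if every model is mapped once to a shared common vocabulary."""
    return n_models


for n in (3, 10, 50):
    print(n, pairwise_mappings(n), hub_mappings(n))
# 3 3 3
# 10 45 10
# 50 1225 50
```

Under the same assumptions, an update to one source may require revisiting up to n - 1 direct mappings, but only one mapping to the shared vocabulary.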

Core Vocabularies contribute to solving all these problems at once by providing agreed-upon, shared building blocks in commonly used domains, such as public services, events, persons, and more. As formulated on the SEMIC Support Centre site, Core Vocabularies can be used as:

- a starting point for designing the conceptual data models and application profiles for newly developed information systems, simply through reuse of the terminology;

- the basis of a particular data model that is used to exchange data among existing information systems;

- a common model to integrate data originating from disparate data sources, thanks to the shared terminology;

- the foundation for defining a common export format for data, thereby facilitating the development of common import mechanisms.

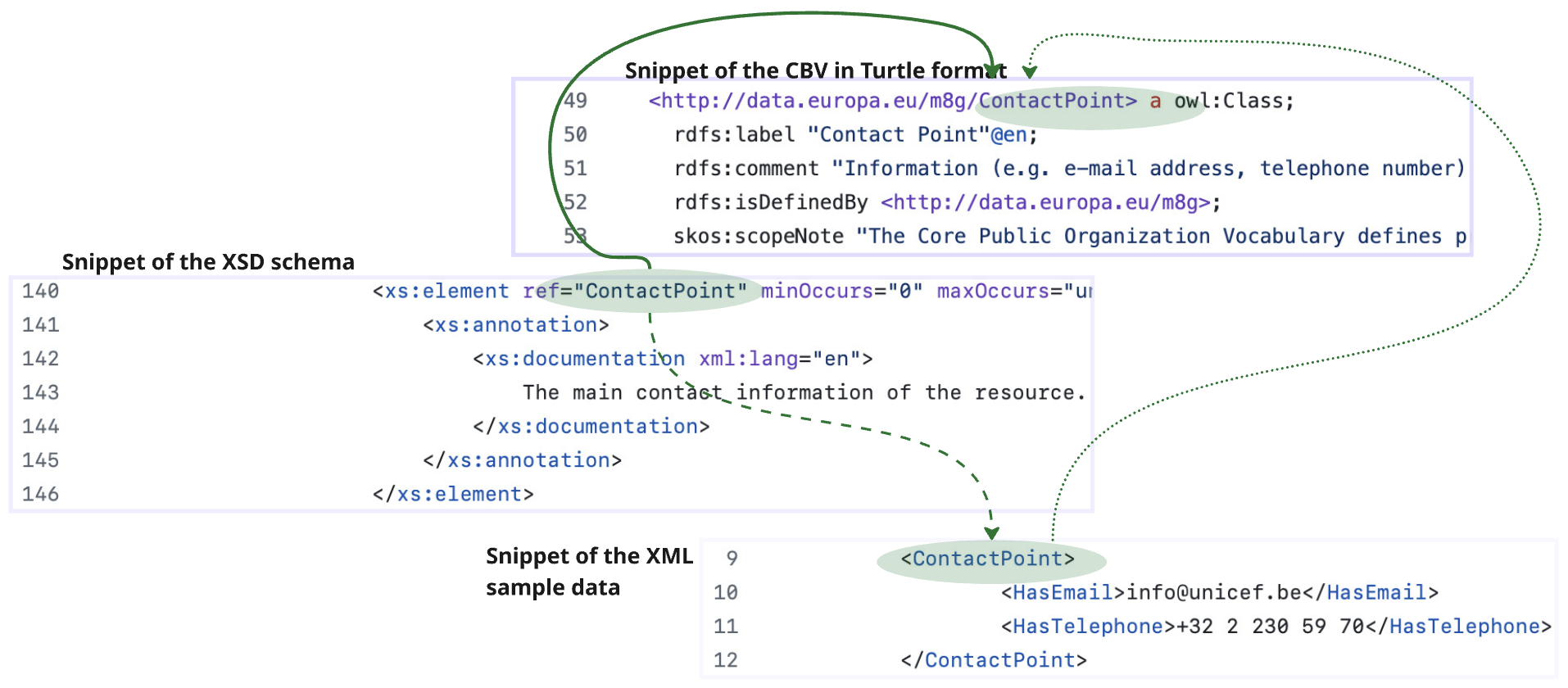

Concretely, let us illustrate the problem and its solution for languages designed for interoperability, such as XML. An XSD schema, and the XML files that adhere to it, help achieve syntactic interoperability for those XML files. It does not, however, achieve interoperability among XSD schemas, because XSD offers no mechanism to declare that a tag in schema xs1, say <bank>, is the same as <bank> in schema xs2. Another mechanism is needed for that, and it is provided by vocabularies that operate at a higher level of abstraction, to which the XSD schemas can be linked: the semantic layer.
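The role of the semantic layer can be sketched in a few lines of Python. The schema names `xs1` and `xs2` come from the text; the concept IRIs under `http://example.org/vocab#`, the `riverBank` element, and the `same_concept` helper are hypothetical. Equivalence is decided through the shared vocabulary rather than by comparing tag names: two elements are treated as the same only when both are linked to the same concept.

```python
# Hypothetical links from element names in two independent XSD schemas (xs1, xs2)
# to IRIs of concepts defined in a shared vocabulary at the semantic layer.
# The IRIs are placeholders, not real Core Vocabulary terms.
SEMANTIC_LINKS = {
    ("xs1", "bank"): "http://example.org/vocab#FinancialInstitution",
    ("xs2", "bank"): "http://example.org/vocab#FinancialInstitution",
    ("xs2", "riverBank"): "http://example.org/vocab#RiverBank",
}


def same_concept(schema_a: str, element_a: str, schema_b: str, element_b: str) -> bool:
    """Two elements denote the same thing only if both are linked to the same concept."""
    concept_a = SEMANTIC_LINKS.get((schema_a, element_a))
    concept_b = SEMANTIC_LINKS.get((schema_b, element_b))
    return concept_a is not None and concept_a == concept_b


print(same_concept("xs1", "bank", "xs2", "bank"))       # True
print(same_concept("xs1", "bank", "xs2", "riverBank"))  # False
print(same_concept("xs1", "bank", "xs1", "unknown"))    # False: no link declared
```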

Alternatively, one can avoid the interoperability problem by creating multiple XSD schemas from the same vocabulary, which then offers semantic interoperability implicitly. A developer who wants to reuse a Core Vocabulary-based XSD can only use it as-is or extend it into a more specific application profile; they cannot modify it to the extent that it would contradict the Core Vocabulary, which preserves the intended interoperability.

In addition, with Core Vocabularies represented in RDFS or OWL, one can mix vocabularies and link to external concepts, whereas an XSD schema can only use types and elements that are defined in it or in schemas it explicitly includes or imports.
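As a minimal sketch of mixing vocabularies, the JSON-LD document below (handled with Python's standard `json` module) describes one resource with a class from one vocabulary and a property from another. The namespace IRIs, the resource IRI, and the `expand_term` helper are illustrative assumptions; `expand_term` implements only simple prefix and term lookup, not full JSON-LD expansion, for which a conforming JSON-LD processor would be used.

```python
import json

# A small JSON-LD document whose context mixes terms from two vocabularies.
# Namespace IRIs are assumptions for illustration: "person" is taken to be the
# Core Person Vocabulary namespace and "schema" is Schema.org.
DOC = json.loads("""
{
  "@context": {
    "person": "http://www.w3.org/ns/person#",
    "schema": "https://schema.org/",
    "email": "schema:email"
  },
  "@id": "http://example.org/people/42",
  "@type": "person:Person",
  "email": "jane@example.org"
}
""")


def expand_term(term: str, context: dict) -> str:
    """Expand a term or compact IRI using only simple prefix/term definitions.

    This is a deliberately tiny subset of JSON-LD expansion, not a conforming processor.
    """
    if term in context:
        term = context[term]          # term definition, e.g. "email" -> "schema:email"
    prefix, sep, local = term.partition(":")
    if sep and prefix in context:     # compact IRI, e.g. "schema:email"
        return context[prefix] + local
    return term                       # already an absolute IRI (or unknown)


ctx = DOC["@context"]
print(expand_term(DOC["@type"], ctx))  # http://www.w3.org/ns/person#Person
print(expand_term("email", ctx))       # https://schema.org/email
```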

Finally, an added benefit is that while multilingual XSD is possible through manually created tags to store the multilingual information, such features are already part of standardised vocabulary languages used by the Core Vocabularies, such as SKOS, RDF, RDFS, and OWL, thereby offering a standardised approach to multilingual labels, which facilitates software development, reuse, and interoperability.
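In RDF-based vocabularies, a multilingual label is simply a literal with a language tag, so no custom tags are needed. The sketch below writes hypothetical labels for one concept as JSON-LD value objects (`@value`, `@language`) and uses a hypothetical `label_for` helper that picks a label by language preference with fallback. It matches whole tags only (so `en-GB` would not fall back to `en`).

```python
from typing import Optional

# Hypothetical labels for one concept, written as JSON-LD value objects with
# language tags (the form a property such as skos:prefLabel takes in JSON-LD).
LABELS = [
    {"@value": "Legal entity", "@language": "en"},
    {"@value": "Entité juridique", "@language": "fr"},
]


def label_for(labels: list[dict], preferred: list[str]) -> Optional[str]:
    """Return the label in the first preferred language that is available, else None.

    Language tags are compared case-insensitively, as BCP 47 tags are case-insensitive.
    """
    by_lang = {item["@language"].lower(): item["@value"] for item in labels}
    for lang in preferred:
        if lang.lower() in by_lang:
            return by_lang[lang.lower()]
    return None


print(label_for(LABELS, ["fr", "en"]))  # Entité juridique
print(label_for(LABELS, ["mt", "en"]))  # Legal entity
print(label_for(LABELS, ["mt"]))        # None
```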

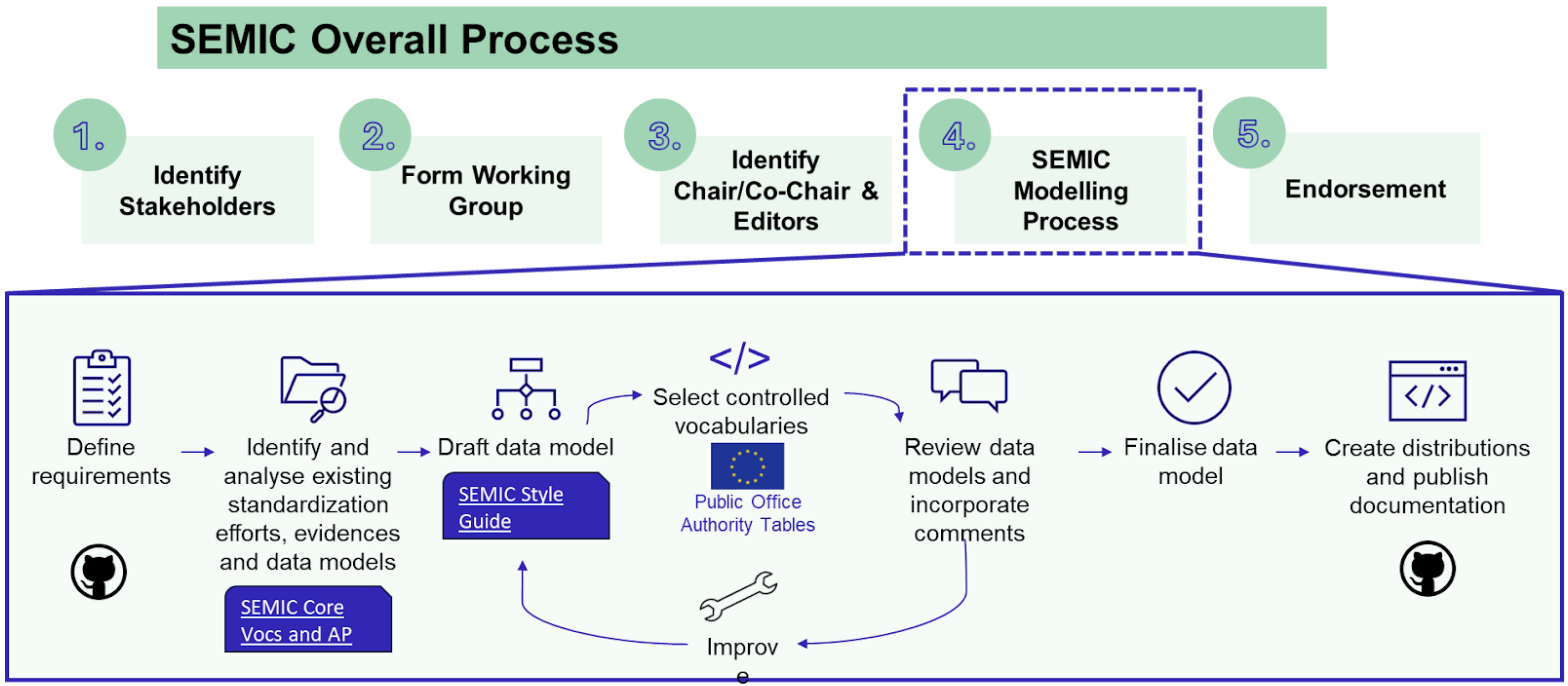

Core Vocabularies lifecycle

The SEMIC Core Vocabularies have been developed following the ‘Process and methodology for developing Core Vocabularies’ [cv-met], of which the most relevant section is depicted in the figure below. Assuming stakeholders and Working Group members with relevant roles are in place, requirements are defined and then thoroughly assessed against existing standardisation efforts, evidence, and other data models. When a change is deemed necessary, it enters a drafting phase that focuses on the technical details whilst adhering to the SEMIC Style Guide, followed by public consultation. Thereafter, if deemed necessary based on the feedback, the model undergoes another modelling iteration; otherwise, the changes are refined and the model is finalised. The final model is formally approved, implemented, and documented, ensuring that it is well understood and agreed upon by all relevant parties.
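The lifecycle can be read as a simple sequence of phases with two decision points. The Python sketch below is a simplified, hypothetical reading of the paragraph above, not the official process: the phase names are our own labels, a change found unnecessary is assumed to end in a `closed` phase, and every further modelling iteration is assumed to go through public consultation again.

```python
def run_change_process(change_needed: bool, iterate_after_consultation: list[bool]) -> list[str]:
    """Return the phases a change request passes through, in order.

    Hypothetical, simplified reading of the described process:
    - if the assessment finds no change necessary, the request ends in "closed" (assumed label);
    - each modelling iteration is assumed to be followed by another public consultation;
    - iterate_after_consultation[i] says whether feedback from consultation round i
      triggers another modelling iteration (missing entries count as False).
    """
    phases = ["requirements", "assessment"]
    if not change_needed:
        return phases + ["closed"]
    round_no = 0
    while True:
        phases += ["drafting", "public_consultation"]
        another_iteration = (round_no < len(iterate_after_consultation)
                             and iterate_after_consultation[round_no])
        round_no += 1
        if not another_iteration:
            break
    return phases + ["finalisation", "approval", "implementation", "documentation"]


print(run_change_process(True, [True, False]))
```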

The release management of Core Vocabularies follows a structured timeline for all tasks and each release includes detailed documentation to support implementation, so that users can integrate new versions with minimal disruption. This process maintains the quality and relevance of the Core Vocabularies, and supports a dynamic and responsive framework for semantic interoperability within digital public services.

1.4. Overview of use cases for using Core Vocabularies

Let us start with a practical example that sets the stage for defining the use cases afterwards.

1.4.1. A practical example

Imagine you are starting a business in another EU country. To complete the registration, you need to submit a criminal record certificate and a diploma certificate to multiple public organisations. In many countries, this process is still manual: people must physically visit different ministries, request documents, and submit them in person or via email. Each organisation may use different formats and terminology, making it difficult for institutions to interpret and process the information correctly. Terminological confusion can also cause data-entry mistakes that subsequently have to be corrected. Without a common reference vocabulary, these organisations interpret the data differently, making seamless exchange impossible.

Now, imagine an alternative scenario, such as the one envisaged by the Once-Only Technical System (OOTS) [oots] for the Single Digital Gateway Regulation [sdgr], where this entire process is fully automated. Instead of individuals having to visit multiple offices, the ministries and public administrations would communicate directly with each other, exchanging the necessary information in a structured and consistent manner. The citizen could simply authorise the administration to fetch the relevant data from the authorities in their home country that have already recorded it. This would eliminate the need for citizens to re-submit data (in line with the once-only principle) and for duplicate document submissions, and would avoid possible data-entry issues, thereby making evidence verification faster. Overall, achieving this degree of data interoperability can considerably reduce burdens for citizens and public administrations in terms of hassle, costs, and mobility.

Defining the what is one step; defining the how is another.

1.4.2. How to solve the scenario with semantic data specifications

How can different systems and institutions "talk" to each other effectively at the level of software applications and the various types of databases? That technical level uses data models to structure the stored data. This first raises the questions of who develops those data models, and how, and then how the different data models across databases and applications agree on the terminology used in them. The challenge is therefore not only technical but also semantic. It is not enough for systems to simply exchange data: they must also be able to interpret the meaning behind the data consistently, so that it does not result in errors or so-called 'dirty' (incorrect or incomplete) data. This requires a common language and a (multilingual) structured vocabulary at both the business process level and the IT systems level.

This is where standards to declare the semantics play a crucial role. By using Core Vocabularies, public administrations can ensure that data and information are structured in a way understood by both humans and machines. Standardised models allow different organisations to recognise and process information without discrepancies, thereby reducing errors and the need for manual intervention. As a result, governments can facilitate seamless data exchange, ensuring that information is accurately expressed, shared, interpreted, and processed across systems, leading to more efficient approvals and interactions for businesses, governmental organisations, and citizens.

These vocabularies can then be used by the IT personnel of the various departments to create their data models, in effect avoiding incompatibility by building interoperability into the systems from the start. But what about existing systems from, e.g., a Ministry of Economic Affairs, a national Chamber of Commerce, and other organisations that are relevant to the Single Digital Gateway? Setting aside existing systems to build a new one may not be reasonable or cost-effective, especially when they already have their own entrenched terminologies embedded in them or have already incorporated other models used internationally, such as Schema.org or the Financial Industry Business Ontology (FIBO). There is a solution to that problem, too: map the specifications at this semantic layer to make the other model interoperable with the CV, and then use both through that declared mapping ‘bridge’ between them.

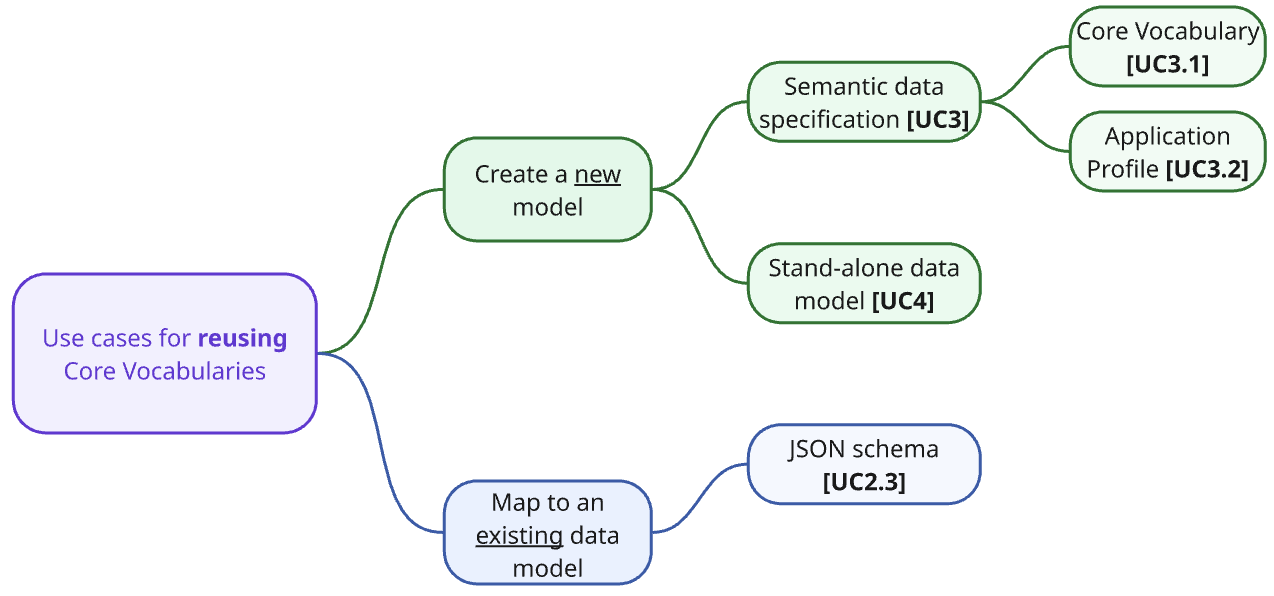

It is these two key ways of using CVs that will be covered in the use cases: creating new data specifications that reuse the CVs (possibly adding one’s own content to them) and mapping existing schemas.

1.4.3. Introduction to the use cases covered in the handbook

This handbook serves as a practical guide for using Core Vocabularies in various common situations. To provide clear and actionable insights, we have categorised potential use cases into two groups:

- Primary Business and Technical Use Cases: These are the most common, interesting, and/or challenging scenarios, all thoroughly covered within this handbook.

- Additional Business and Technical Use Cases: These briefly introduce other relevant scenarios but are not elaborated on in detail in this handbook.

In the current context, a business case is to be understood as a narrative user story (in the terminology of technical experts), which functions as motivation for the use case. Business cases capture the who, what, and why in a broader context: who the beneficiary of an action is, what they need, and what the benefit is. Such a narrative is then condensed into a user story, a structured sentence that captures the essence of the business case while also communicating its genericity. For the technical reader, a use case specification is listed afterwards, which provides a schematic view as a step towards the precise technical specifications.

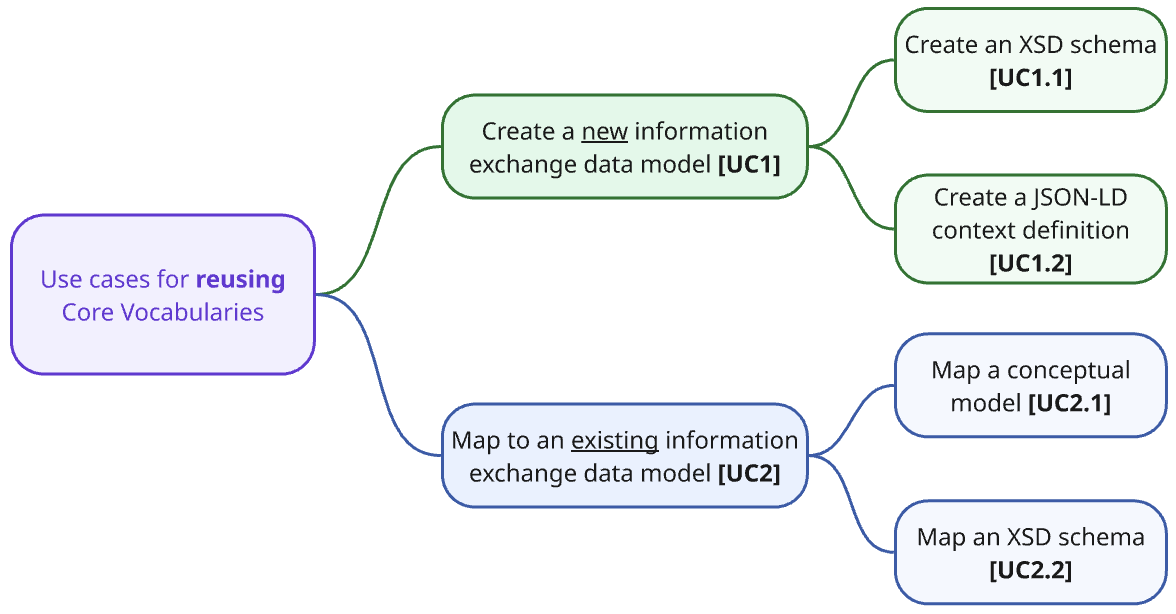

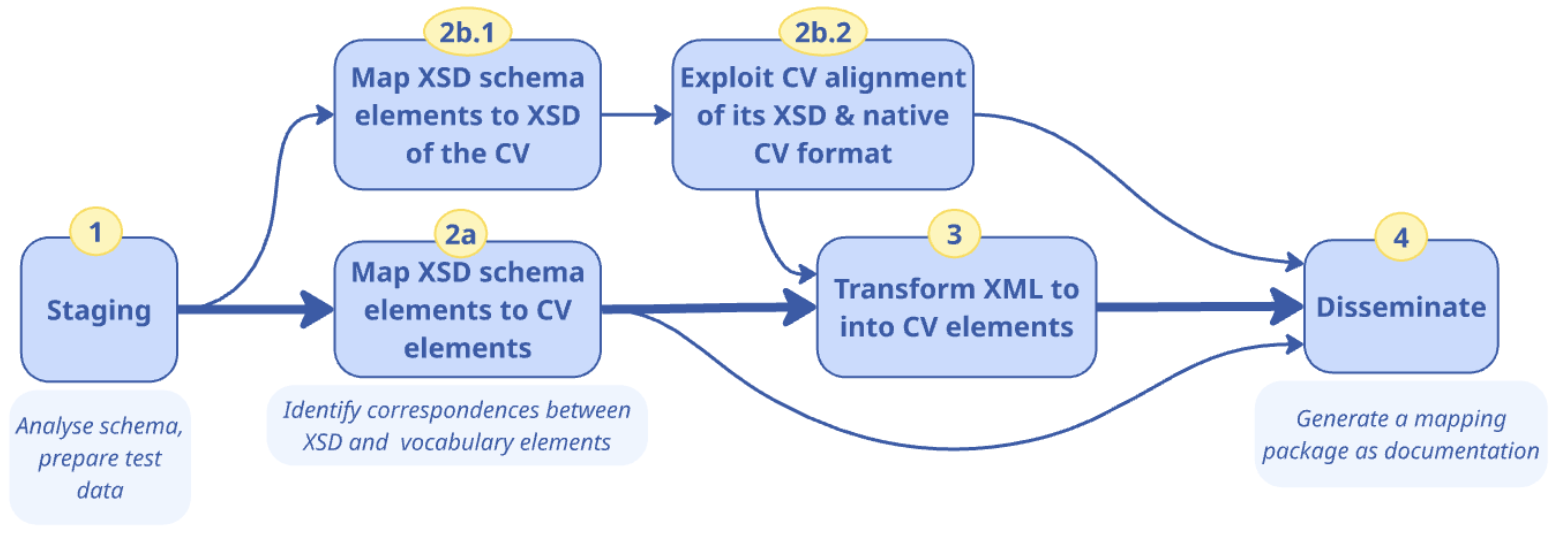

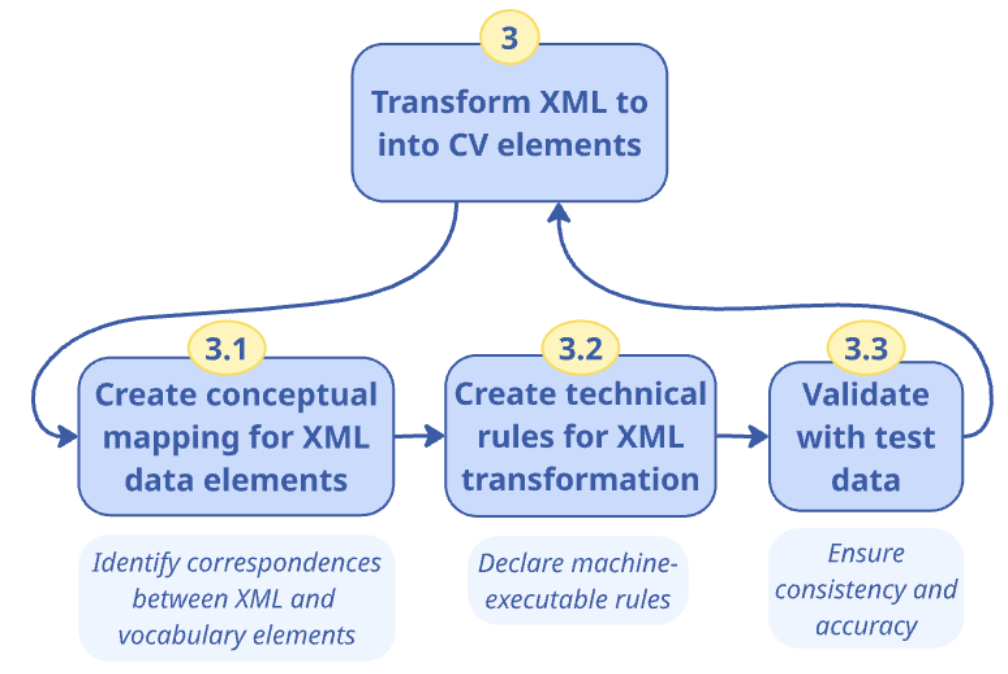

We differentiate between use cases focussed on the creation of new artefacts and those involving the mapping of existing artefacts to Core Vocabularies. For better clarity, we numbered the use cases and organised them into two diagrams, one for the primary scenarios (see Figure 2, below) and the other for depicting the additional ones. The former are addressed in the main part of this handbook, whereas the latter are listed in the Appendix and may be elaborated on at a later date if there is a demand from the community.

The use cases are written in a white-box style, oriented towards user goals, following Cockburn’s classification [uc-book]. We use the following template to describe the relevant use cases:

Use Case <UC>: Title of the use case |

Goal: A succinct sentence describing the goal of the use case |

Primary Actor: The primary actor or actors of this use case |

Actors: (Optional) Other actors involved in the use case |

Description: Short description of the use case providing relevant information for its understanding |

Example: An example to illustrate the application of this use case |

Note: (Optional) notes about this use case, especially related to its coverage in this handbook |

The use cases described below serve as a quick-access overview; complete concrete scenarios are introduced in the “Description” subsection of each use case’s dedicated section.

UC1: Create a new model from a Core Vocabulary

For creating new models, there are two business cases for illustration. In short:

- UC1.1: Jean-Luc, from the Maltese Chamber of Commerce, wants to create an XML schema (XSD) by using the Core Business Vocabulary (CBV), so that he reduces design time and ensures consistent, interoperable, and standards-compliant e-form validation across government systems.

- UC1.2: Nora, from the Norwegian Digitalisation Task Force, needs to provide guidance to the City of Oslo on creating a new JSON-LD context based on the Core Public Service Vocabulary Application Profile (CPSV-AP). This way, the City can create smart data models compliant with national and European interoperability standards and publish linked data for cross-system data exchange.

The main use case UC1 is summarised as follows; it is subsequently refined into two more specific use cases.

Use Case UC1: Create a new information exchange data model |

Goal: Create a new standalone data schema that uses terms from Core Vocabularies. |

Primary Actors: Semantic Engineer, Software Engineer |

Description: The goal is to design and create a new data schema or information exchange data model that is not part of a more comprehensive semantic data specification, relying on terms from existing CVs as much as possible. |

Note: As this is a more generic use case, it will be broken down into concrete use cases that focus on specific data formats. |

Use Case UC1.1: Create a new XSD schema |

Goal: Create a new standalone XSD schema that uses terms from Core Vocabularies. |

Primary Actors: Semantic Engineer, Software Engineer |

Description: The goal is to design and create a new XSD schema that is not part of a more comprehensive semantic data specification, relying on terms from existing CVs as much as possible. As an information exchange data model, an XSD schema can be used to create and validate XML data to be exchanged between information systems. |

Example: OOTS XML schema mappings [oots] |

Note: A detailed methodology to be applied for this use case will be provided in the Create a new XSD schema section. |
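One possible way, not prescribed here, to keep an XSD traceable to the Core Vocabulary terms it reuses is to annotate each element with the IRI of the reused term, using the `source` attribute of `xs:appinfo`. The Python sketch below (standard library only) builds such a skeleton. The element names and the `build_schema` helper are hypothetical, and the CBV IRIs are assumptions to be checked against the published vocabulary.

```python
import xml.etree.ElementTree as ET

XS = "http://www.w3.org/2001/XMLSchema"
ET.register_namespace("xs", XS)  # serialise the XML Schema namespace with the usual "xs" prefix

# Hypothetical selection of element names and the Core Vocabulary terms they reuse.
# The CBV IRIs below are assumptions for illustration; check them against the published CBV.
TERMS = {
    "LegalEntity": "http://www.w3.org/ns/legal#LegalEntity",
    "LegalName": "http://www.w3.org/ns/legal#legalName",
}


def build_schema(terms: dict[str, str]) -> ET.Element:
    """Build a skeleton XSD whose global elements record the CV term they reuse.

    Types are omitted for brevity, so each element defaults to xs:anyType.
    """
    schema = ET.Element(f"{{{XS}}}schema")
    for name, iri in terms.items():
        element = ET.SubElement(schema, f"{{{XS}}}element", {"name": name})
        annotation = ET.SubElement(element, f"{{{XS}}}annotation")
        # The source attribute of xs:appinfo holds a URI; here it points to the reused term.
        ET.SubElement(annotation, f"{{{XS}}}appinfo", {"source": iri})
    return schema


print(ET.tostring(build_schema(TERMS), encoding="unicode"))
```

A real schema would add types, cardinalities, and a target namespace; the annotation only documents the link and does not by itself enforce any semantics.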

Use Case UC1.2: Create a new JSON-LD context definition |

Goal: Create a new standalone JSON-LD context definition that uses terms from Core Vocabularies. |

Primary Actors: Semantic Engineer, Software Engineer |

Description: The goal is to design and create a new JSON-LD context definition that is not part of a more comprehensive semantic data specification, relying on terms from existing CVs as much as possible. As an information exchange data model, a JSON-LD context definition can be used to describe data, build APIs, and support other operations involved in information exchange. |

Example: Core Person Vocabulary [cpv-json-ld], Core Business Vocabulary [cbv-json-ld] |

Note: A detailed methodology to be applied for this use case will be provided in the Create a new JSON-LD context definition section. |

UC2: Map an existing model to a Core Vocabulary

For mapping vocabularies, there are two business cases for illustration. In short:

-

UC2.1: Sofía, with the Department of Agriculture’s outreach division, wants to map her existing conceptual model about outreach events, which already uses the Core Business Vocabulary (CBV), and align it with Schema.org. This way, the event data can be distributed using both European interoperability vocabularies and global Web vocabularies, reaching a wider audience with accurate data.

-

UC2.2: Ella, working for the National Registry of Certified Legal Practitioners, wants to map her existing XML Schema Definition (XSD) to the Core Business Vocabulary (CBV). This enables her to transform the XML data into semantically enriched RDF that complies with European interoperability standards and supports linked data publication, thereby enabling cross-system data exchange.

The main use case UC2 is summarised as follows; it is subsequently refined into two specific use cases. These summaries serve as a quick overview; concrete scenarios are introduced in the dedicated “Description” sections.

Use Case UC2: Map an existing data model to a Core Vocabulary |

Goal: Create a mapping of an existing (information exchange) data model to terms from Core Vocabularies. |

Primary Actors: Semantic Engineer |

Actors: Domain Expert, Software Engineer |

Description: The goal is to design and create a mapping of an ontology, vocabulary, or some kind of data schema or information exchange data model that is not part of a more comprehensive semantic data specification, to terms from CVs. Such a mapping can be done at a conceptual level, or formally, e.g., in the form of transformation rules, and most often will include both. |

Note: Since this is a more generic use case, it will be broken down into concrete use cases that focus on specific data models and/or data formats. Some of those use cases will be described in detail below, while others will be included in the Appendix with the additional use cases. |

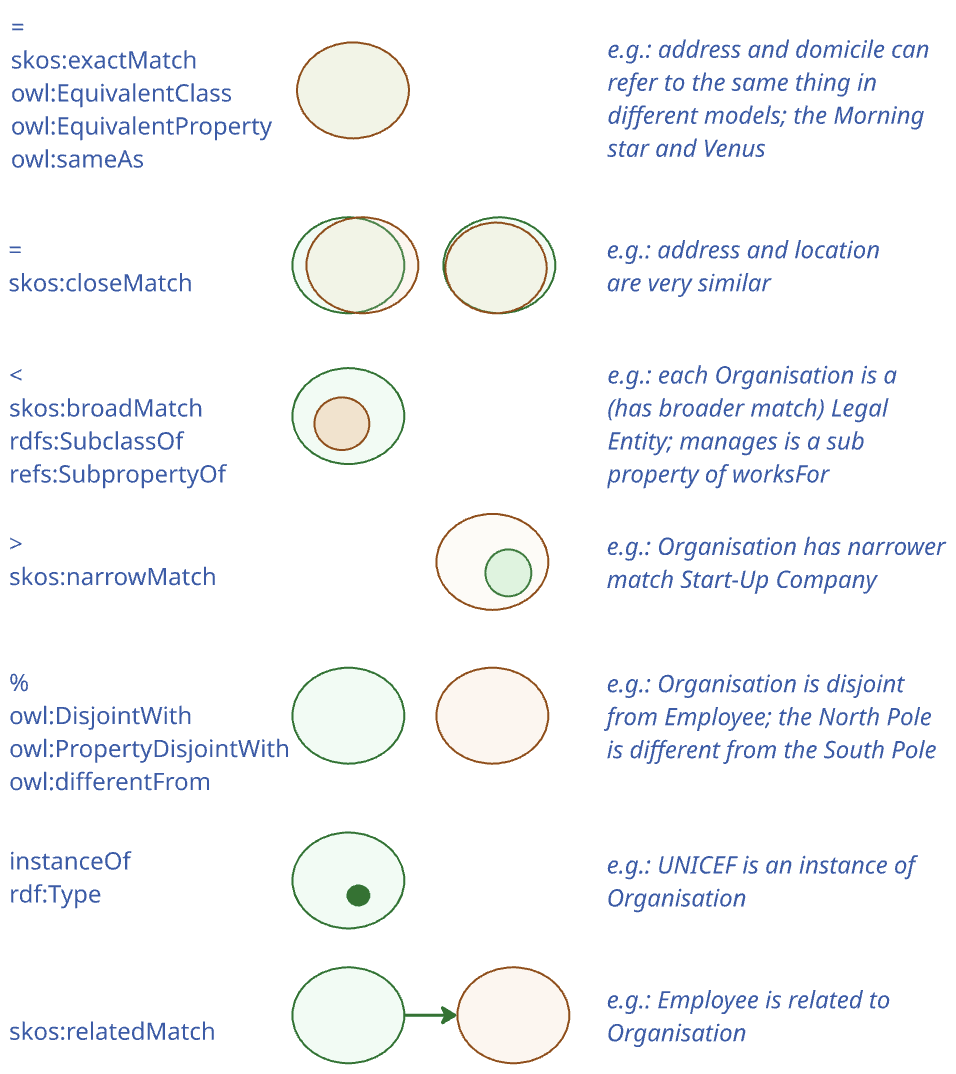

Use Case UC2.1: Map an existing Ontology to a Core Vocabulary |

Goal: Create a mapping between the terms of an existing ontology and the terms of Core Vocabularies. |

Primary Actors: Semantic Engineer |

Actors: Domain Expert, Business Analyst, Software Engineer |

Description: The goal is to create a formal mapping expressed in Semantic Web terminology (for example, using the rdfs:subClassOf, rdfs:subPropertyOf, owl:equivalentClass, owl:equivalentProperty, or owl:sameAs properties) that associates the terms of an existing ontology, which defines relevant concepts in a given domain, with terms defined in one or more CVs. This activity is usually performed by a semantic engineer based on input from domain experts and/or business analysts, who can assist with the creation of a conceptual mapping between the terms of the existing ontology and those of one or more SEMIC Core Vocabularies. The resulting formal mapping can later be used by software engineers to build information exchange systems. |

Example: Mapping Core Person to Schema.org [map-cp2org], Core Business to Schema.org [map-cb2org], etc. |

Note: A detailed methodology to be applied for this use case will be provided in the Map an existing Model section. |
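To make the notion of a formal mapping concrete, the following minimal Python sketch (standard library only) serialises a few alignment statements as N-Triples. The alignments themselves, such as treating legal:LegalEntity as a subclass of schema:Organization, and the choice of the http://schema.org/ namespace form are illustrative assumptions; they are not taken from the published Core Business to Schema.org mapping [map-cb2org].

```python
# Minimal sketch: write ontology alignments as N-Triples.
# The alignments below are illustrative assumptions, not a published mapping.

RDFS = "http://www.w3.org/2000/01/rdf-schema#"
OWL = "http://www.w3.org/2002/07/owl#"
LEGAL = "http://www.w3.org/ns/legal#"  # namespace of the Core Business terms used here
SCHEMA = "http://schema.org/"

# Each alignment is (source term, mapping property, target term).
ALIGNMENTS = [
    (LEGAL + "LegalEntity", RDFS + "subClassOf", SCHEMA + "Organization"),
    (LEGAL + "legalName", OWL + "equivalentProperty", SCHEMA + "legalName"),
]


def to_ntriples(alignments):
    """Return one N-Triples statement per (subject, predicate, object) IRI triple."""
    return "\n".join(f"<{s}> <{p}> <{o}> ." for s, p, o in alignments)


print(to_ntriples(ALIGNMENTS))
```

Whether a pair of terms warrants a subclass/subproperty statement or a full equivalence is a modelling decision that the domain experts and business analysts mentioned above should confirm.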

Use Case UC2.2: Map an existing XSD schema to a Core Vocabulary |

Goal: Define the data transformation rules for the mapping of an XSD schema to terms from Core Vocabularies. Create a mapping of XML data that conforms to an existing XSD schema to an RDF representation that conforms to a Core Vocabulary for formal data transformation. |

Primary Actors: Semantic Engineer |

Actors: Domain Expert, Business Analyst, Software Engineer |

Description: The goal is to create a formal mapping using Semantic Web technologies (e.g., RML or other languages) that allows the automated translation of XML data conforming to a given XSD schema into RDF data expressed in terms defined in one or more SEMIC Core Vocabularies. This use case requires the definition of an Application Profile for a Core Vocabulary, because the CV alone does not specify sufficient instantiation constraints to be mapped precisely. |

Example: ISA2core SAWSDL mapping [isa2-map] |

Note: A detailed methodology to be applied for this use case will be provided in the Map an existing XSD schema section. |

The additional use cases are described in the Appendix.

2. Creating a new data model from an existing Core Vocabulary

Technologies rise and decline in popularity, yet one constant across decades of computing techniques is the management of structured data. Data needs to be stored, processed, acted upon, shared, integrated, presented, and more, all mediated by software. This also means that the structure of the data needs to be machine-readable, going far beyond simple scanned hard-copy administrative forms or legal documents. Structured data mediates between entities in the real world and their representation in software.

For instance, to represent the fact “govtDep#1 subcontracts comp#2”, we might have:

-

a table with government departments that also contains a row with govtDep#1;

-

a table with companies and their identifying data including comp#2; and

-

a table with subcontracting relationships between instances, including (govtDep#1, comp#2).
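The three tables above can be sketched with SQLite from the Python standard library. Table names, column names, and the display names are assumptions made for illustration; the point is that the fact “govtDep#1 subcontracts comp#2” becomes a single row in the relationship table.

```python
# Hypothetical sketch of the three tables, using SQLite from the standard library.
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE government_department (id TEXT PRIMARY KEY, name TEXT);
    CREATE TABLE company (id TEXT PRIMARY KEY, name TEXT);
    -- One row per subcontracting relationship between two instances.
    CREATE TABLE subcontracting (
        department_id TEXT REFERENCES government_department(id),
        company_id    TEXT REFERENCES company(id),
        PRIMARY KEY (department_id, company_id)
    );
""")
conn.execute("INSERT INTO government_department VALUES ('govtDep#1', 'Department 1')")
conn.execute("INSERT INTO company VALUES ('comp#2', 'Company 2')")
# The fact "govtDep#1 subcontracts comp#2" is a single row here.
conn.execute("INSERT INTO subcontracting VALUES ('govtDep#1', 'comp#2')")

rows = conn.execute("SELECT department_id, company_id FROM subcontracting").fetchall()
print(rows)  # [('govtDep#1', 'comp#2')]
```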

A specification of the structured and semi-structured data that may be stored and managed in a system is called a data model when it is at the level of the technical implementation, and a conceptual data model or vocabulary, as part of a semantic data specification, when it is implementation-independent. Such models represent entity types, such as GovernmentDepartment, Company, and their more generic type Organisation from our example, along with relationships between entity types, such as Subcontracting. Data models typically also implement business rules or constraints that apply to the particular organisation. For instance, one rule might state that each government department is permitted to subcontract at most 15 companies in country#3, whereas there may be no upper bound on subcontracting in country#4, and subcontracting may be prohibited (i.e., at most 0 subcontracts are permitted) in country#5. These variations require different data models or application profiles, although they may use the same vocabulary.
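The country-specific rules above can be sketched as data plus a single check: the vocabulary (departments, companies, subcontracting) stays the same, while the caps, which play the role of application-profile constraints, vary per country. This is a hypothetical Python illustration, not a prescribed implementation.

```python
# Hypothetical sketch: one vocabulary, country-specific subcontracting caps.
from typing import Optional

# Maximum number of subcontracted companies per government department;
# None means no upper bound. Values mirror the example in the text.
MAX_SUBCONTRACTS: dict[str, Optional[int]] = {
    "country#3": 15,
    "country#4": None,
    "country#5": 0,  # subcontracting prohibited
}


def violates_rule(country: str, subcontract_count: int) -> bool:
    """True if subcontracting `subcontract_count` companies breaks the cap in `country`."""
    cap = MAX_SUBCONTRACTS[country]
    return cap is not None and subcontract_count > cap


print(violates_rule("country#3", 16))   # True
print(violates_rule("country#4", 100))  # False
print(violates_rule("country#5", 1))    # True
```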

This raises a number of questions:

-

What sort of data models are there?

-

Who develops those models?

-

How do they develop the models?

-

How can we ensure that those models are interoperable across applications and organisations, so that the data is interoperable as a consequence of adhering to those model specifications?

Data models can be declared in a number of languages and are normally developed by data modellers and database designers. While they may develop a data model from scratch, they increasingly try to reuse existing specifications to speed up development and foster interoperability. For use case UC1, we address these questions from the perspective of creating new data models that reuse Core Vocabularies, either in full or in part, depending on the specific needs. In this chapter, we focus on creating two types of data models: XSD schemas and JSON-LD contexts.

Each model type has a business case explaining why one would want to create it, which is described in the respective “Use case description” section. The respective “Guidelines” section then walks the reader through the creation process (addressing a mixed technical and non-technical audience), and finally the respective “Tutorial” section targets technical staff with a step-by-step example that implements the guideline.

2.1. Create a new XSD schema from a Core Vocabulary (UC1.1)

2.1.1. Use case description

We will introduce the motivation for the use case with a user story.

Imagine Jean-Luc, a semantic/software engineer assigned to develop a software application for processing online forms for the Maltese Chamber of Commerce. The format options for online forms include Office365, CSV, and XML, each with its pros and cons. Jean-Luc chooses XML, since many other forms are already stored in XML format.

He is aware that XML files should have a schema declared first, which contains the specifications of the sort of elements and fields that are permitted to be used in the forms, such as the company’s registration number, name, and address. However, analysing the data requirements from scratch is not the preferred option. Moreover, there are Chambers of Commerce in other EU countries, which use forms to collect and update data. Perhaps he could reuse and adapt those schemas?

As Jean-Luc starts to search for existing models, called XML schemas in XSD format, he realises there are other places where businesses need to submit forms with company information, such as online registries and the tax office, that also may have XSD files available for reuse.

Unfortunately, not one of them made their schema available.

Given that such availability would also be useful at the EU level, he looks for guidance there. He finds the SEMIC Core Business Vocabulary, which has terminology he can reuse. This not only saves time in developing his own XSD schema but also makes it interoperable with all other XSD schemas that reuse the vocabulary.

User story: As a semantic engineer working in public sector IT, I want to create an XML schema (XSD) by reusing elements from the existing Core Business Vocabulary (CBV), so that I can reduce design time and ensure consistent, interoperable, and standards-compliant e-form validation across government systems.

The business case translates into the following use case specification, which is instantiated from the general UC1.1 description in the previous section:

Use Case UC 1.1: Create a new XSD schema |

Goal: Create a new XSD schema for e-forms from the Core Business Vocabulary. |

Primary Actors: Semantic Engineer |

Description: Design and create a new XSD schema for the Maltese Chamber of Commerce, reusing as much as possible from the Core Business Vocabulary. This new schema is to be used principally to validate e-forms, and possibly to exchange or collect data from other software systems. |

Having established the who, what, and why, the next step is how to accomplish this. An established guideline of good practice for XSD Schema development from a vocabulary is consulted to guide the process. This guideline is described in the next section.

2.1.2. Guidelines to create a new XSD schema

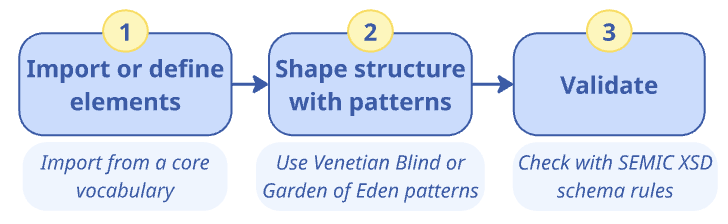

This section provides detailed instructions for addressing use case UC1.1. To create a new XSD schema, the following main steps need to be carried out:

-

Import or define elements

-

Shape structure with patterns

-

Validation

This is visualised in the following figure, together with key tasks and suggestions.

2.1.2.1. Phase 1: Import or define elements

When working with XML schemas, particularly in relation to semantic artefacts like ontologies or data shapes, managing imports and namespaces is vital to ensure clarity, reusability, and proper integration of various data models.

When a Core Vocabulary has an associated XSD schema, it is not only easy but also advisable to import this schema directly using the xs:import element. This enables seamless reuse and guarantees that any complex types or elements defined within the Core Vocabulary are integrated correctly and transparently within new schemas.

The imported elements are then employed in the definition of a specific document structure. For example, the Core Vocabularies build on DCTERMS, which provides an XML schema, so Core Person could import the DCTERMS XML schema in order to reuse a DCTERMS concept.

In cases where the Core Vocabulary does not provide an XSD schema, it is necessary to create the XML element definitions in the new XSD schema corresponding to the reused URIs. Crucially, these new elements must adhere to the namespace defined by the Core Vocabulary to maintain consistency; for the Core Vocabularies, they must be defined within the http://data.europa.eu/m8g/ namespace.

Furthermore, when integrating these elements into a new schema, it is essential to reflect the constraints from the Core Vocabulary’s data shape (specifically, which properties are optional and which are mandatory) within the XSD schema element definitions.
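As an illustration of both points, the following Python sketch (standard library only) generates element declarations inside a complex type whose target namespace is http://data.europa.eu/m8g/, and copies cardinalities from a data shape into minOccurs/maxOccurs. The shape excerpt, the UpperCamelCase naming of elements, and the placeholder xs:string type are assumptions for illustration; in a real schema, the cardinalities and types would come from the Core Vocabulary’s own data shape.

```python
# Minimal sketch: element declarations for reused Core Vocabulary terms,
# with cardinalities copied from a (hypothetical) data shape.
import xml.etree.ElementTree as ET

XS = "http://www.w3.org/2001/XMLSchema"
SAWSDL = "http://www.w3.org/ns/sawsdl"
CV_NS = "http://data.europa.eu/m8g/"
ET.register_namespace("xs", XS)
ET.register_namespace("sawsdl", SAWSDL)

# Hypothetical shape excerpt: (property URI, minCount, maxCount or None = unbounded).
SHAPE = [
    ("http://data.europa.eu/m8g/registeredAddress", 1, 1),
    ("http://data.europa.eu/m8g/contactPoint", 0, None),
]


def element_name(uri):
    """Turn a property URI into an UpperCamelCase element name (assumed convention)."""
    local = uri.rsplit("/", 1)[-1]
    return local[0].upper() + local[1:]


def build_schema(type_name, shape):
    """Build an xs:schema in the CV namespace containing one complex type."""
    schema = ET.Element(f"{{{XS}}}schema",
                        targetNamespace=CV_NS, elementFormDefault="qualified")
    ctype = ET.SubElement(schema, f"{{{XS}}}complexType", name=type_name)
    sequence = ET.SubElement(ctype, f"{{{XS}}}sequence")
    for uri, min_count, max_count in shape:
        if not uri.startswith(CV_NS):
            raise ValueError(f"{uri} is not in the Core Vocabulary namespace")
        ET.SubElement(sequence, f"{{{XS}}}element", {
            "name": element_name(uri),
            "type": "xs:string",  # placeholder; use the data type from the CV
            "minOccurs": str(min_count),  # 1 or more means the property is mandatory
            "maxOccurs": "unbounded" if max_count is None else str(max_count),
            f"{{{SAWSDL}}}modelReference": uri,  # link back to the reused URI
        })
    return schema


schema = build_schema("LegalEntityType", SHAPE)
ET.indent(schema)
print(ET.tostring(schema, encoding="unicode"))
```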

Reusing elements and types from the Core Vocabulary improves interoperability and alignment with EU data standards, yet also imposes some limitations. Since reuse occurs at the syntactic level, element names and structures, including complex types, from the Core Vocabulary can be extended, but not easily restricted (for instance, limiting Organization to a single sub-organization would require creating a new complex type). These trade-offs between semantic interoperability and technical consistency are discussed in the Why Core Vocabularies section.

2.1.2.2. Phase 2: Shape XML document structure

In designing XML schemas, the selection of a design pattern has implications for the reusability and extension of the schema. The Venetian Blind and Garden of Eden patterns stand out as preferable for their ability to allow complex types to be reused by different elements [dsg-ptr].

The Venetian Blind pattern is characterised by having a single global element that serves as the entry point for the XML document, from which all the elements can be reached. This pattern implies a certain directionality and starting point, analogous to choosing a primary class in an ontology that has direct relationships to other classes, and from which one can navigate to the rest of the classes.

Adopting the Venetian Blind pattern reduces variability in how the schema is applied and makes it usable in specific scenarios, by providing not only well-defined elements but also a rigid and predictable structure.

On the other hand, the Garden of Eden pattern allows for multiple global elements, providing various entry points into the XML document. This pattern accommodates ontologies where no single class is inherently central, mirroring the flexibility of graph representations in ontologies that do not have a strict hierarchical starting point.

Adopting the Garden of Eden pattern provides a less constrained approach, enabling users to represent information starting from different elements that may hold significance in different contexts. This approach has been adopted by standardisation initiatives such as NIEM [niem] and UBL [ubl], which recommend such flexibility for broader applicability and ease of information representation.

However, the Garden of Eden pattern does not lead to a schema that can be used directly in final application scenarios, because it does not ensure a single stable document structure but leaves room for variations. This schema pattern therefore requires an additional composition specification. For example, if it is used in a SOAP API [soap-api], the developers can decide to use multiple starting points to facilitate the exchange of granular messages specific to each API endpoint. This way, the XSD schema remains reusable across different API endpoints and even API implementations.

Overall, the choice between these patterns should be informed by the intended use of the schema, the level of abstraction of the ontology it represents, and the needs of the end-users, aiming to strike a balance between structure and flexibility.

We consider the Garden of Eden pattern suitable for designing XSD schemas at the level of core or domain semantic data specifications, and the Venetian Blind pattern suitable for XSD schemas at the level of specific data exchange or API.

Recommendation for choosing the appropriate pattern: The Venetian Blind pattern suits an API where a central entity is the main entry point, offering a structured schema for defined use cases. The Garden of Eden pattern is better for Core or Domain Data Specifications, where multiple entry points provide flexibility for general-purpose data models.
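The difference between the two patterns shows up in a schema’s top level, which makes a rough automated check possible. The following Python sketch is a heuristic assumed for illustration, not part of any standard: it reports Venetian Blind for exactly one global element plus named global types, Garden of Eden for several global elements plus named global types, and "other" for any other layout (for example, a Russian Doll schema with anonymous types).

```python
# Heuristic sketch: guess the design pattern from a schema's top level.
import xml.etree.ElementTree as ET

XS = "{http://www.w3.org/2001/XMLSchema}"


def global_elements(xsd_text):
    """Names of elements declared directly under xs:schema (possible entry points)."""
    root = ET.fromstring(xsd_text)
    return [el.get("name") for el in root.findall(f"{XS}element")]


def classify(xsd_text):
    """Return 'Venetian Blind', 'Garden of Eden', or 'other'."""
    root = ET.fromstring(xsd_text)
    n_elements = len(root.findall(f"{XS}element"))
    has_named_types = bool(root.findall(f"{XS}complexType"))
    if n_elements == 1 and has_named_types:
        return "Venetian Blind"
    if n_elements > 1 and has_named_types:
        return "Garden of Eden"
    return "other"


VENETIAN = """<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema">
  <xs:element name="LegalEntity" type="LegalEntityType"/>
  <xs:complexType name="LegalEntityType">
    <xs:sequence>
      <xs:element name="LegalName" type="xs:string"/>
    </xs:sequence>
  </xs:complexType>
</xs:schema>"""

GARDEN = """<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema">
  <xs:element name="LegalEntity" type="LegalEntityType"/>
  <xs:element name="Organization" type="OrganizationType"/>
  <xs:complexType name="LegalEntityType"/>
  <xs:complexType name="OrganizationType"/>
</xs:schema>"""

print(classify(VENETIAN))  # Venetian Blind
print(classify(GARDEN))    # Garden of Eden
```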

Complex types should be defined, if deemed necessary, only after importing or defining the basic elements and applying the patterns. A type is complex when it has multiple properties, be they attributes or relationships.

Finally, complete the XSD schema by adding annotations and documentation. These improve understanding of the schema’s content, both for external users and for the authors themselves at a later date, and communicate its purpose so that the schema will be deployed as intended.


Add annotations and documentation using the |

2.1.2.3. Phase 3: Validation

The schema should be validated with at least one sample XML document, to verify that it is syntactically correct, semantically as intended, and that it has adequate coverage. SEMIC XSD schemas adhere to best practices, and the resulting XSD schemas should do likewise, following the SEMIC Style Guide and its validation rules, which maintain consistency, clarity, and reusability across schemas. These rules include naming conventions, documentation standards, and structural rules.
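Full validation needs a dedicated XML Schema validator, but the “adequate coverage” criterion can be approximated with a simple check: which declared elements occur in at least one sample document? The Python sketch below is a simplified illustration under stated assumptions: it matches elements by local name only and ignores namespaces and nesting.

```python
# Minimal sketch: which declared elements do the sample documents exercise?
import xml.etree.ElementTree as ET

XS = "{http://www.w3.org/2001/XMLSchema}"


def declared_names(xsd_text):
    """Names of all element declarations (global and local) in the schema."""
    root = ET.fromstring(xsd_text)
    return {el.get("name") for el in root.iter(f"{XS}element") if el.get("name")}


def used_names(xml_text):
    """Local names of all elements in a sample document (namespaces ignored)."""
    root = ET.fromstring(xml_text)
    return {el.tag.rsplit("}", 1)[-1] for el in root.iter()}


def coverage(xsd_text, samples):
    """Return (fraction of declared elements used, sorted list of unused ones)."""
    declared = declared_names(xsd_text)
    used = set().union(*(used_names(s) for s in samples)) & declared
    return len(used) / len(declared), sorted(declared - used)


SCHEMA = """<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema">
  <xs:element name="LegalEntity" type="LegalEntityType"/>
  <xs:complexType name="LegalEntityType">
    <xs:sequence>
      <xs:element name="LegalName" type="xs:string"/>
      <xs:element name="RegisteredAddress" type="xs:string" minOccurs="0"/>
    </xs:sequence>
  </xs:complexType>
</xs:schema>"""

SAMPLE = "<LegalEntity><LegalName>Example Ltd</LegalName></LegalEntity>"

print(coverage(SCHEMA, [SAMPLE]))  # (0.6666666666666666, ['RegisteredAddress'])
```

An unused element is not necessarily an error, but it signals that the sample set does not yet exercise that part of the schema.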

Having created the XML representation from the Core Vocabulary, we have thus created a binding between the technical and the semantic layer for the interoperability of the data. Either layer may evolve over time; changes initiated on one side should be coordinated with the other and may require re-validation of the binding. Strategies to avoid problematic divergence should be put in place.

2.1.3. Tutorial: Create an XSD schema using the Core Business Vocabulary

Creating an XSD schema using the Core Business Vocabulary (CBV) involves defining the structure, data types, and relationships for the elements of the CBV, ensuring interoperability between systems. This tutorial follows the guidelines outlined for Use Case UC1.1 "Create a New XSD Schema", showing how to design and create an XSD schema that integrates terms from the Core Business Vocabulary (CBV). This step-by-step guide focuses on the essential phases of the schema creation process, ensuring that the elements from CBV are correctly imported, the document structure is shaped properly, and all constraints are applied.

To recap the process, we will first import or define elements, then shape the structure with patterns, define complex types, and finalise the schema, before validating it.

2.1.3.1. Phase 1: Import or define elements

Managing Imports and Namespaces

In XML Schema development, managing imports and namespaces is crucial to ensure that elements from external vocabularies are reused and integrated consistently. This step ensures that the schema obtains and maintains semantics, will be reusable, and is correctly aligned with the Core Business Vocabulary (CBV).

For example, since CBV comes with its own XSD schema, the following import statement imports all definitions related to CBV elements into your XSD schema (explained afterwards):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<xs:schema
    xmlns:xs="http://www.w3.org/2001/XMLSchema"
    targetNamespace="http://data.europa.eu/m8g/xsd"
    xmlns="http://data.europa.eu/m8g/xsd"
    xmlns:dct="http://purl.org/dc/terms/"
    xmlns:sawsdl="http://www.w3.org/ns/sawsdl"
    elementFormDefault="qualified"
    attributeFormDefault="unqualified"
    version="2.2.0">

    <!-- Importing Core Business Vocabulary schema -->
    <xs:import
        namespace="http://data.europa.eu/m8g/"
        schemaLocation="https://raw.githubusercontent.com/SEMICeu/XML-schema/refs/heads/main/models/CoreVoc_Business/CoreVoc_Business.xsd"/>

</xs:schema>
```

The key components are:

-

<xs:import>: The element that imports the CBV schema to make its terms available in your schema.

-

namespace="http://data.europa.eu/m8g/": Defines the namespace of the CBV.

-

schemaLocation="https://raw.githubusercontent.com/SEMICeu/XML-schema/main/models/CoreVoc_Business/CoreVoc_Business.xsd": Points to the location of the CBV schema file on the Web.
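Two properties of such an import can be checked mechanically. XSD does not allow a schema to import its own target namespace, and imported components can only be referenced by qualified name (e.g., in ref or type attributes) if the importing schema binds a prefix to the imported namespace. The following Python sketch (standard library only; the warning texts are our own) reports both. Applied to an abridged copy of the header above, it notes that no prefix is bound to http://data.europa.eu/m8g/, which only matters once you reference CBV components from your own declarations.

```python
# Minimal sketch: inspect the xs:import elements of an XSD schema.
import io
import xml.etree.ElementTree as ET

XS = "http://www.w3.org/2001/XMLSchema"


def check_imports(xsd_text):
    """Return warnings about the schema's xs:import elements."""
    bound_uris = set()  # namespace URIs with a prefix (or default) binding
    root = None
    source = io.BytesIO(xsd_text.encode("utf-8"))
    for event, item in ET.iterparse(source, events=("start-ns", "start")):
        if event == "start-ns":
            _prefix, uri = item
            bound_uris.add(uri)
        elif root is None:
            root = item  # the first start event is the xs:schema element
    target = root.get("targetNamespace")
    warnings = []
    for imp in root.findall(f"{{{XS}}}import"):
        ns = imp.get("namespace")
        if ns is None:
            continue  # import of a no-namespace schema; not checked here
        if ns == target:
            # XSD forbids importing the schema's own target namespace.
            warnings.append(f"import of own target namespace {ns}")
        elif ns not in bound_uris:
            warnings.append(f"no prefix bound to imported namespace {ns}; "
                            "its components cannot be referenced by QName")
    return warnings


# Abridged copy of the tutorial header (schemaLocation shortened).
TUTORIAL_HEADER = """<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema"
    targetNamespace="http://data.europa.eu/m8g/xsd"
    xmlns="http://data.europa.eu/m8g/xsd">
  <xs:import namespace="http://data.europa.eu/m8g/"
      schemaLocation="CoreVoc_Business.xsd"/>
</xs:schema>"""

print(check_imports(TUTORIAL_HEADER))
```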

Define elements

If the XSD schema of the CV does not suffice, because you need elements beyond those it provides, you have to define them yourself in the XSD schema you are developing. These may be terms from the CV associated with the XSD, or possibly terms from another CV or semantic artefact.

These new elements need to adhere to the Core Vocabulary’s namespace to maintain consistency.

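For example, the LegalEntity element could be defined as follows if no XSD is provided for it:

```xml
<xs:element name="LegalEntity" type="LegalEntityType"/>
```

Make sure you declare the correct namespace for all these custom elements: the Core Vocabulary namespace (http://data.europa.eu/m8g/) for CV terms, and the namespace of the respective artefact (e.g., http://example.com/) for elements taken from elsewhere.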

2.1.3.2. Phase 2: Shape the XML document structure with patterns

At this phase, we focus on structuring the XML document using appropriate XML Schema Design Patterns [dsg-ptr]. The Venetian Blind and Garden of Eden patterns are two methods for organising the schema.

Venetian Blind Pattern

In the Venetian Blind pattern, there is one primary global element, and all other elements are nested inside it. This approach is ideal when a central entity, such as LegalEntity, serves as the entry point, as seen in CBV’s XSD. This pattern fits well with API design, where you typically request information about a central concept (such as LegalEntity), and the response includes nested properties, including LegalName and RegisteredAddress, which are all organised under the main entity.

Here’s an example, where LegalEntity serves as the main entry point:

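```xml
<xs:schema
    targetNamespace="http://data.europa.eu/m8g/xsd"
    xmlns="http://data.europa.eu/m8g/xsd"
    xmlns:xs="http://www.w3.org/2001/XMLSchema"
    xmlns:dct="http://purl.org/dc/terms/"
    xmlns:sawsdl="http://www.w3.org/ns/sawsdl"
    elementFormDefault="qualified">

    <!-- The single global element: the entry point of every document -->
    <xs:element name="LegalEntity" type="LegalEntityType"/>

    <!-- Named global type; its child elements are declared locally,
         so they cannot start a document on their own -->
    <xs:complexType name="LegalEntityType">
        <xs:sequence>
            <xs:element name="LegalName" type="TextType"/>
            <xs:element name="RegisteredAddress" type="AddressType"/>
            <!-- Other elements -->
        </xs:sequence>
    </xs:complexType>

</xs:schema>
```

In this example: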

-

LegalEntity is the global entry point.

-

It uses LegalEntityType, which contains various properties such as LegalName and RegisteredAddress.

Garden of Eden Pattern

In the Garden of Eden pattern, there are multiple entry points in the XML document. This is more flexible and is suitable when no central class is inherently the main starting point. The elements that are declared directly under <xs:schema> qualify as such entry points. In CBV these include LegalEntity, Organization etc., whereas nested elements, such as RegisteredAddress or ContactPoint, are defined inside those complex types and cannot start a document on their own.

```xml
<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema">
    <!-- Each global element is a possible document entry point -->
    <xs:element name="LegalEntity" type="LegalEntityType"/>
    <xs:element name="Organization" type="OrganizationType"/>
</xs:schema>
```

Define Complex Types

After importing or defining the basic elements and structuring your XML document with patterns, the next step in creating an XSD schema is to define complex types. Complex types are used to represent business entities that contain multiple properties or relationships. For CBV, these types often model entities like LegalEntity or Organization, which have both simple and complex elements, for example LegalEntityType and OrganizationType.

An implemented LegalEntity might contain multiple child elements, such as LegalName modelled as a simple string, RegisteredAddress (also a complex type), and other related elements. Here’s how LegalEntityType is defined in the XSD schema:

```xml
<xs:complexType name="LegalEntityType"
    sawsdl:modelReference="http://www.w3.org/ns/legal#LegalEntity">
    <xs:sequence>
        <xs:element
            ref="LegalName"
            minOccurs="0"
            maxOccurs="unbounded"
            sawsdl:modelReference="http://www.w3.org/ns/legal#legalName"/>
        <xs:element
            ref="RegisteredAddress"
            minOccurs="0"
            maxOccurs="unbounded"
            sawsdl:modelReference="http://data.europa.eu/m8g/registeredAddress"/>
        <!-- More elements as needed -->
    </xs:sequence>
</xs:complexType>
```

The sawsdl:modelReference annotation is used to link the element to an external concept, providing semantic context by associating the element with a specific vocabulary or ontology.
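Because these annotations are machine-readable, a tool can collect them into an element-to-concept table, for instance as a starting point when transforming XML data into RDF. The Python sketch below (standard library only) does this for the LegalEntityType definition above; keying the table by the name or ref attribute is a simplifying assumption.

```python
# Minimal sketch: harvest sawsdl:modelReference annotations from an XSD.
import xml.etree.ElementTree as ET

MODEL_REF = "{http://www.w3.org/ns/sawsdl}modelReference"


def model_references(xsd_text):
    """Map each annotated type/element (by name, or by ref) to its concept URI."""
    root = ET.fromstring(xsd_text)
    refs = {}
    for node in root.iter():
        uri = node.get(MODEL_REF)
        if uri is not None:
            refs[node.get("name") or node.get("ref")] = uri
    return refs


SCHEMA = """<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema"
           xmlns:sawsdl="http://www.w3.org/ns/sawsdl">
  <xs:complexType name="LegalEntityType"
      sawsdl:modelReference="http://www.w3.org/ns/legal#LegalEntity">
    <xs:sequence>
      <xs:element ref="LegalName" minOccurs="0" maxOccurs="unbounded"
          sawsdl:modelReference="http://www.w3.org/ns/legal#legalName"/>
      <xs:element ref="RegisteredAddress" minOccurs="0" maxOccurs="unbounded"
          sawsdl:modelReference="http://data.europa.eu/m8g/registeredAddress"/>
    </xs:sequence>
  </xs:complexType>
</xs:schema>"""

for key, uri in model_references(SCHEMA).items():
    print(f"{key} -> {uri}")
```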

Like the LegalEntityType complex type, BusinessAgentType defines a concept with multiple properties and relationships. In this case, however, the complex type contains hierarchical relationships, such as HeadOf and MemberOf.

```xml
<xs:complexType name="BusinessAgentType"
    sawsdl:modelReference="http://xmlns.com/foaf/0.1/Agent">
    <xs:sequence>
        <xs:element
            ref="HeadOf"
            minOccurs="0"
            maxOccurs="unbounded"
            sawsdl:modelReference="http://www.w3.org/ns/org#headOf">
        </xs:element>
        <xs:element
            ref="MemberOf"
            minOccurs="0"
            maxOccurs="unbounded"
            sawsdl:modelReference="http://www.w3.org/ns/org#memberOf">
        </xs:element>
    </xs:sequence>
</xs:complexType>
```

It’s important to observe that in this context, LegalEntityType is defined as an extension of FormalOrganizationType (which, in turn, extends OrganizationType), declared using an <xs:extension base="…"> element, as shown in the following snippet.

```xml
<!-- LegalEntityType -->
<xs:element name="LegalEntity" type="LegalEntityType"/>
<xs:complexType name="LegalEntityType"
    sawsdl:modelReference="http://www.w3.org/ns/legal#LegalEntity">
    <xs:complexContent>
        <xs:extension base="FormalOrganizationType"/>
    </xs:complexContent>
</xs:complexType>
```

Finalise the XSD schema

Adding annotations and documentation to each complex type and element helps to clarify their purpose and improve the readability of the schema. For instance:

```xml
<xs:complexType name="BusinessAgentType"
    sawsdl:modelReference="http://xmlns.com/foaf/0.1/Agent">
    <xs:annotation>
        <xs:documentation xml:lang="en">
            Entity that is able to carry out action.
        </xs:documentation>
    </xs:annotation>
</xs:complexType>
```

2.1.3.3. Phase 3: Validation and best practices

Finally, test your new schema by validating sample XML documents using XML validation tools (e.g., XMLValidation) to ensure that the schema is syntactically correct and works as expected. The Core Business Vocabulary (CBV) follows several best practices and validation rules to maintain consistency, clarity, and reusability across schemas. These rules include naming conventions, documentation standards, and structural rules.

Schematron Validation Rules

To ensure schema compliance, the Schematron rules provide automated checks. These rules cover key aspects such as type definitions, element declarations, metadata, and more. The detailed list of rules can be found here.

Running the Validation

You can execute the rules using the provided build.xml file, which leverages Apache Ant. The process validates the schema against the Schematron rules and generates HTML reports for easy inspection.
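The authoritative checks are the Schematron rules run via Apache Ant as described above. Purely to illustrate what such rules look at, here is a Python sketch of two checks in the same spirit: a naming convention and a documentation requirement. Both rules are assumptions for illustration and do not reproduce the SEMIC rule set.

```python
# Minimal sketch of two Schematron-style checks, written in Python.
# The rules are illustrative, not the SEMIC Style Guide's actual rules.
import xml.etree.ElementTree as ET

XS = "{http://www.w3.org/2001/XMLSchema}"
DOC_PATH = f"{XS}annotation/{XS}documentation"


def check_schema(xsd_text):
    """Return findings for global complex types and global elements."""
    root = ET.fromstring(xsd_text)
    findings = []
    for ctype in root.findall(f"{XS}complexType"):
        name = ctype.get("name", "")
        # Naming convention: complex type names end with "Type".
        if not name.endswith("Type"):
            findings.append(f"complexType '{name}': name should end with 'Type'")
        # Documentation standard: every global type is documented.
        if ctype.find(DOC_PATH) is None:
            findings.append(f"complexType '{name}': missing xs:documentation")
    for element in root.findall(f"{XS}element"):
        if element.find(DOC_PATH) is None:
            findings.append(f"element '{element.get('name')}': missing xs:documentation")
    return findings


SCHEMA = """<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema">
  <xs:element name="BusinessAgent" type="BusinessAgentType"/>
  <xs:complexType name="BusinessAgentType">
    <xs:annotation>
      <xs:documentation xml:lang="en">Entity that is able to carry out action.</xs:documentation>
    </xs:annotation>
  </xs:complexType>
  <xs:complexType name="Address"/>
</xs:schema>"""

for finding in check_schema(SCHEMA):
    print(finding)
```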

2.2. Create a new JSON-LD context definition from a Core Vocabulary (UC1.2)

2.2.1. Use case description

Public administrations often need to share information about their services with other organisations and the public. To do this effectively, the data must be easy to understand and work seamlessly across different systems. However, public services are becoming more complex, which means we need to capture more details, concepts, and relationships to handle various use cases. This was also the case in Norway, which arrived at a fruitful solution. Let us imagine how that might have happened in the following scenario, which motivates the use case and is followed by a user story that summarises it.

Consider Nora, who works for DiTFor, the Norwegian Digitalisation Task Force. Although Norway is not a member of the EU, it is closely associated with the EU through its membership in the European Economic Area (EEA) and the Schengen Area. As part of the EEA, Norway participates in the EU’s internal market and adopts many EU laws and regulations. Therefore, there is a lot of cross-border collaboration with EU member states, and a number of publicly available resources exist for use and reuse to facilitate interoperable exchange. These include a vocabulary that could be used for a generic framework for digitalising the administration of public services: the Core Public Service Vocabulary Application Profile (CPSV-AP). DiTFor extended it to better fit their context and needs, for example by introducing RequiredEvidence, which provides a way to explicitly define the documentation or credentials needed to access a service, such as proof of address for a library card. The extension was published as CPSV-AP-NO.

Happy with the outcome, Nora emailed the municipalities so that each city and town would be able to upgrade their system in the same way with CPSV-AP-NO, and so that DiTFor could still collect and integrate the data at the national level.

Meanwhile, the City of Oslo’s transportation services department had just learned of smart data models, such as dataModel.Transportation, for managing data about public road network maintenance and their helpdesk for reporting road maintenance issues. That data, stored according to the smart data model, could then also be used by the public transport network management organisation, working towards the aim of making Oslo a Smart City. A popular way to specify smart data models is a JSON-LD context, because it helps structure the data so it can be easily shared and understood by different systems.

The City of Oslo received DiTFor’s notification about the CPSV-AP-NO: their data models needed to comply with the CPSV-AP-NO for the purposes of effective use and interoperability. Looking into the details, they realised that it should be possible to utilise CPSV-AP-NO for their smart data model in JSON-LD and, in fact, would save them time looking for other vocabularies and adapting those. The question became one of how to do it, and so they replied to Nora’s email inquiring whether she could also provide instructions for using the Application Profile.

User Story: As a software engineer at a public sector department, I want to create a new JSON-LD context based on the Core Public Service Vocabulary Application Profile (CPSV-AP), so that I can create interoperable smart data models that comply with national and European interoperability standards and support linked data publication to facilitate cross-system data exchange.

This business case translates into the following use case specification, which is instantiated from the general UC1.2 description in the previous section.

Use Case UC 1.2: Create a new JSON-LD context |

Goal: Create a new JSON-LD context that links to the CPSV-AP. |

Primary Actors: Semantic Engineer and Software Engineer |

Description: Design and implement a new JSON-LD context definition for the transportation services department of Oslo that adheres to, and takes as input, the national extension of the CPSV-AP (i.e., CPSV-AP-NO). Carry out the task in a systematic way following an agreed-upon guideline. |

Having established the who, what, and why, the next step is how to accomplish this. The semantic engineer specifies the guidelines for JSON-LD context development from a vocabulary, which makes it easier for the software engineer to implement it. The guideline is described in the next section.

2.2.2. Guidelines to create a new JSON-LD context definition

This section provides guidelines for addressing use case UC1.2.

JSON-LD is a W3C Recommendation for serialising Linked Data that combines the simplicity, power, and Web ubiquity of JSON with the concepts of Linked Data. A JSON-LD context definition is what makes this combination work: it maps the keys of an ordinary JSON document to globally unique IRIs. As a result, when data is shared or integrated across systems, it keeps its meaning and is interpreted in the same way in different contexts. This guide describes how to create new JSON-LD contexts for existing Core Vocabularies.
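As a minimal illustration (the key and value are placeholders), the context below maps the local JSON key name to the Dublin Core title property. A JSON-LD processor expands this document to `[{"http://purl.org/dc/terms/title": [{"@value": "Example service"}]}]`, so every consumer sees the same global property, whatever the local key is called.

```json
{
  "@context": {
    "name": "http://purl.org/dc/terms/title"
  },
  "name": "Example service"
}
```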

The three key phases are:

- Import or define elements
- Shape structure
- Review and validate

This is visualised in the following figure, together with key tasks and suggestions.

2.2.2.1. Phase 1: Import or define elements

When a Core Vocabulary already has an associated JSON-LD context, it is both easy and advisable to import that context directly with the @import keyword (introduced in JSON-LD 1.1). This enables seamless reuse and ensures that the term definitions published with the vocabulary, including any type coercions and container settings, are carried over unchanged into the new context.

If the Core Vocabulary does not provide a JSON-LD context, the term definitions for the reused URIs have to be created manually, in three steps:

- Gather all the terms from the selected Core Vocabulary that need to be included in the JSON-LD context.
- Decide on the desired structure of the JSON-LD file by defining the corresponding keys, such as Person.givenName. These keys must follow the naming defined by the selected Core Vocabulary to maintain consistency.
- Assign URIs to keys. Each term in the JSON-LD context must be associated with a URI from an ontology that defines its meaning in a globally unambiguous way. Map each JSON key to the URI that the Core Vocabulary establishes for the corresponding term.

URIs that the Core Vocabularies themselves reuse from other vocabularies shall be used as originally defined.
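Applied to the Core Person Vocabulary, the three steps could produce a flat context like the following minimal sketch. It keeps only two properties, and the IRIs are those we understand the Core Person Vocabulary to use (person:Person, foaf:givenName, foaf:familyName); they should be checked against the vocabulary release you target.

```json
{
  "@context": {
    "Person": "http://www.w3.org/ns/person#Person",
    "Person.givenName": "http://xmlns.com/foaf/0.1/givenName",
    "Person.familyName": "http://xmlns.com/foaf/0.1/familyName"
  }
}
```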

Example: importing an existing context.

```json
{
  "@context": {
    "@import": "https://example.org/cpsv-ap.jsonld"
  }
}
```

2.2.2.2. Phase 2: Shape structure

Main shaping of the structure

Start with defining the structure of the context by relating class terms with property terms and then, if necessary, property terms with other classes.

Begin by creating a JSON structure that starts with a @context field. This field holds the mappings from your own terms, i.e. the JSON keys, to the URIs that define them. Continue by defining keys for the classes and for their properties.

If the JSON-LD context is developed to be used directly in an exchange specific to an application scenario, aim for a complete tree structure that starts from a single root class. To do so, specify precise @type references that link each nested object to its specific class.

If the aim of the JSON-LD context is instead to ensure semantic correspondences without any structural constraints, which is the case for core or domain semantic data specifications, then structure definitions specific to each entity type and its properties suffice, with only loose references to other objects.
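For the application-specific case, the following minimal sketch shows a single-root tree: the public service is the root node, and its contact point is embedded as a nested object with an explicit @type. The example.org identifiers are placeholders. In the looser, core-level style, the same property would carry only the contact point's IRI, as in `"Service.contactPoint": "http://example.org/contact/1"`, which the `"@type": "@id"` coercion turns into a reference.

```json
{
  "@context": {
    "Service": "http://purl.org/vocab/cpsv#PublicService",
    "Service.name": "http://purl.org/dc/terms/title",
    "Service.contactPoint": {
      "@id": "http://data.europa.eu/m8g/contactPoint",
      "@type": "@id"
    },
    "ContactPoint": "http://data.europa.eu/m8g/ContactPoint"
  },
  "@id": "http://example.org/service/1",
  "@type": "Service",
  "Service.name": "Example service",
  "Service.contactPoint": {
    "@id": "http://example.org/contact/1",
    "@type": "ContactPoint"
  }
}
```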

Example: defining a class with properties.

```json
{
  "@context": {
    "Service": "http://example.org/Service",
    "Service.name": "http://purl.org/dc/terms/title"
  }
}
```

Design note: Flat vs scoped context disambiguation

When defining properties in a JSON-LD context, one has to consider how attribute names are disambiguated across different classes. Two main approaches can be adopted:

- Flat context disambiguation. In this approach, demonstrated in the previous example, each property is declared globally and identified by a fully qualified key (for example, Service.name). This guarantees that each attribute is uniquely associated with its URI, even when the same property name appears in different classes. The flat approach is straightforward to generate automatically and ensures full disambiguation, which is why it is adopted by the SEMIC toolchain. However, it can result in less readable JSON structures, because the prefixed property names may appear verbose or repetitive.
- Scoped context disambiguation. A context can be defined per class, allowing property names such as name or description to be reused within each class-specific scope. This produces cleaner and more human-readable JSON but can be more complex to design and maintain. Scoped contexts often require explicit @type declarations or additional range indicators to ensure that the correct mappings are applied during JSON-LD expansion.
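The scoped approach can be sketched with the type-scoped contexts introduced in JSON-LD 1.1: a class term carries its own @context, which applies to nodes of that type. In this minimal example, the key name maps to dct:title inside a Service node and to legal:legalName inside a LegalEntity node. The use of legal:legalName is our assumption for illustration, and the literal values are placeholders.

```json
{
  "@context": {
    "@version": 1.1,
    "Service": {
      "@id": "http://purl.org/vocab/cpsv#PublicService",
      "@context": {
        "name": "http://purl.org/dc/terms/title"
      }
    },
    "LegalEntity": {
      "@id": "http://www.w3.org/ns/legal#LegalEntity",
      "@context": {
        "name": "http://www.w3.org/ns/legal#legalName"
      }
    }
  },
  "@graph": [
    { "@type": "Service", "name": "Example service" },
    { "@type": "LegalEntity", "name": "Example Ltd" }
  ]
}
```

Type-scoped contexts do not propagate to nested nodes by default, which is one reason why scoped designs depend on explicit @type declarations on every node.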

The choice between flat or scoped contexts should be motivated by the expected use of the data. When contexts are generated automatically or used for large-scale data exchange, the flat approach offers simplicity and reliability. When contexts are manually authored or designed for human-facing APIs, scoped contexts may be preferable for improved readability, provided that their additional complexity is manageable.

Improvements to the structure

To meet the expectations of API consumers, keywords can be aliased: a JSON-LD keyword such as @id is given a more easily recognisable name, such as url.

The context can also be extended by reusing terms from Core Vocabularies. A complete Core Vocabulary context can be included with the @import keyword; alternatively, individual elements can be added, such as additional properties mapped to elements of other vocabularies.
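Both improvements can be combined in one context. The following minimal sketch reuses the placeholder import URL from Phase 1, aliases @id and @type as id and type, and adds one extra property mapped to dcat:keyword from the DCAT vocabulary; the key Service.keyword and the choice of DCAT are purely illustrative.

```json
{
  "@context": {
    "@import": "https://example.org/cpsv-ap.jsonld",
    "id": "@id",
    "type": "@type",
    "Service.keyword": "http://www.w3.org/ns/dcat#keyword"
  }
}
```

When the imported context and the local entries define the same term, the local definition takes precedence.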

2.2.2.3. Phase 3: Review and validate

First, review the created context against any requirements described beforehand: is all the intended content actually included in the context?

Second, verify the syntax with a JSON-LD validator, such as the JSON-LD Playground, to ensure that the context is free of errors and that all referenced URLs resolve.
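A JSON-LD processor also catches errors that a plain JSON syntax check cannot detect. For example, the following context is well-formed JSON, but a JSON-LD processor should reject it with an invalid type mapping error: the value of @type is neither @id, @vocab, @json, @none nor an absolute IRI, and there is no @vocab or term definition that could expand langString. The property mapping is only an illustration.

```json
{
  "@context": {
    "Service.name": {
      "@id": "http://purl.org/dc/terms/title",
      "@type": "langString"
    }
  }
}
```

Replacing langString with a full IRI, or with @id for references to other resources, resolves the error.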

Example: an import URL that cannot be dereferenced. A JSON-LD processor stops with a loading remote context failed error.

```json
{
  "@context": [
    { "@import": "https://invalid-url/cpsv-ap.jsonld" }
  ]
}
```

2.2.3. Tutorial: Create a JSON-LD context from the CPSV-AP Core Vocabulary

This tutorial addresses use case UC1.2. It shows how to create a JSON-LD context for an Application Profile that extends CPSV-AP with new concepts defined by reusing concepts from the Core Business Vocabulary (CBV), following ideas from CPSV-AP-NO.

2.2.3.1. Phase 1: Import or define elements

Since CPSV-AP provides a JSON-LD context, we can import it into our own JSON-LD context using the @import keyword. For example, for CPSV-AP version 3.2.0, the context can be reused directly like this:

```json
{
  "@context": {
    "@import": "https://raw.githubusercontent.com/SEMICeu/CPSV-AP/master/releases/3.2.0/context/cpsv-ap.jsonld"
  }
}
```

If a context does not exist, define the elements explicitly. For example, CPSV-AP uses specific terms such as PublicService and ContactPoint. These terms must be mapped to URIs.

```json
{
  "@context": {
    "PublicService": "http://purl.org/vocab/cpsv#PublicService",
    "ContactPoint": "http://data.europa.eu/m8g/ContactPoint"
  }
}
```

If a context needs to be extended, define the new elements explicitly. For example, if we need new terms (classes), such as Service and RequiredEvidence, which are not in CPSV-AP, these terms must be mapped to URIs (the examples are inspired by CPSV-AP-NO):

```json
{
  "@context": {
    "Service": "http://example.com/cpsvap#Service",
    "RequiredEvidence": "http://example.com/cpsvap#RequiredEvidence"
  }
}
```

Once you've imported or defined the relevant terms, you need to structure your JSON-LD context to reflect the relationships between the classes and their properties. This allows you to describe public services and their details in a standardised and machine-readable format.

Let’s look at an example where we define a Service and some of its key properties, such as contactPoint, description, name and hasRequiredEvidence:

```json
{
  "@context": {
    "@import": "https://raw.githubusercontent.com/SEMICeu/CPSV-AP/master/releases/3.2.0/context/cpsv-ap.jsonld",
    "Service": "http://example.com/cpsvap#Service",
    "Service.hasRequiredEvidence": {
      "@id": "http://example.com/cpsvap#hasRequiredEvidence",
      "@type": "@id",
      "@container": "@set"
    },
    "Service.description": {
      "@id": "http://purl.org/dc/terms/description",
      "@type": "http://www.w3.org/1999/02/22-rdf-syntax-ns#langString",
      "@container": "@set"
    },
    "Service.name": {
      "@id": "http://purl.org/dc/terms/title",
      "@type": "http://www.w3.org/1999/02/22-rdf-syntax-ns#langString",
      "@container": "@set"
    },
    "Service.contactPoint": {
      "@id": "http://data.europa.eu/m8g/contactPoint",
      "@type": "@id",
      "@container": "@set"
    }
  }
}
```

Explanation of JSON-LD keywords used:

- @context: Defines the mapping between terms (e.g., PublicService) and their corresponding IRIs.
- @container: Specifies how values are structured. For instance:
  - @set: Explicitly declares a property as a set of values. When a document is compacted with the context, such a property is always output as an array, even if the data contains just one value. This makes post-processing of JSON-LD documents easier, as the data is always in array form.
- @id: Provides the unique identifier (IRI) for a term or property; in a node object, it identifies the node itself.
- @type: In a node object, specifies the class of the node. In a term definition, specifies the datatype to which string values are coerced or, with the value @id, declares that string values are IRIs of other resources.
- @import: Imports another JSON-LD context (a JSON-LD 1.1 feature), allowing reuse of its terms.
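To see what @id, @type and @container do in practice, consider a minimal node described with the context above: `"@id": "http://example.org/service/1"`, `"Service.contactPoint": "http://example.org/contact/1"` and `"Service.description": {"@value": "A sample service.", "@language": "en"}` (all example.org values are placeholders). Expanding it, for instance in the JSON-LD Playground, should give the following result, sketched here by hand:

```json
[
  {
    "@id": "http://example.org/service/1",
    "http://data.europa.eu/m8g/contactPoint": [
      { "@id": "http://example.org/contact/1" }
    ],
    "http://purl.org/dc/terms/description": [
      { "@value": "A sample service.", "@language": "en" }
    ]
  }
]
```

Because of `"@type": "@id"`, the contact point string becomes a reference to another resource rather than a string literal. The language-tagged value object is kept as given, since type coercion from a term definition only applies to plain values, and in expanded form every property value is an array.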

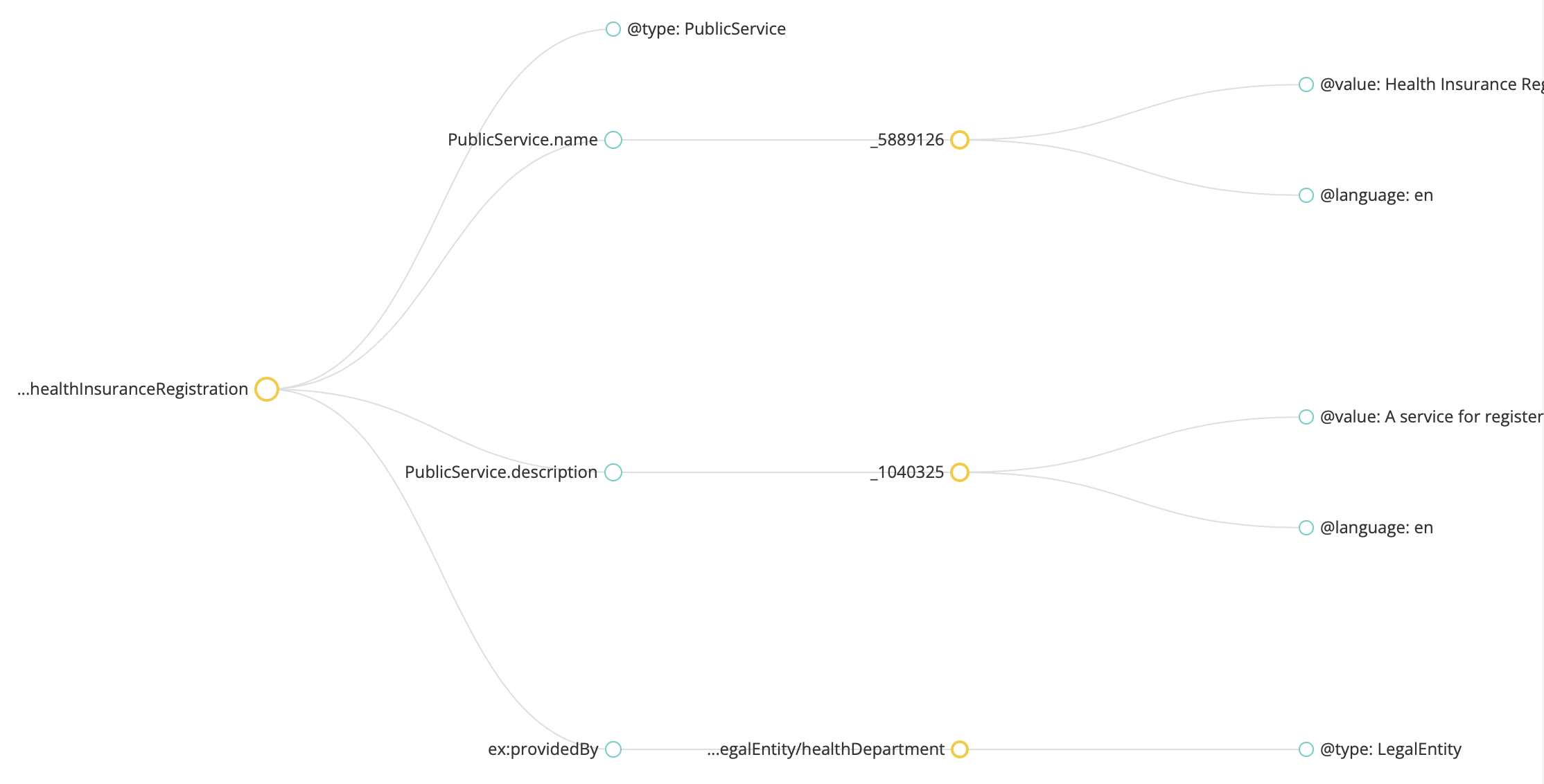

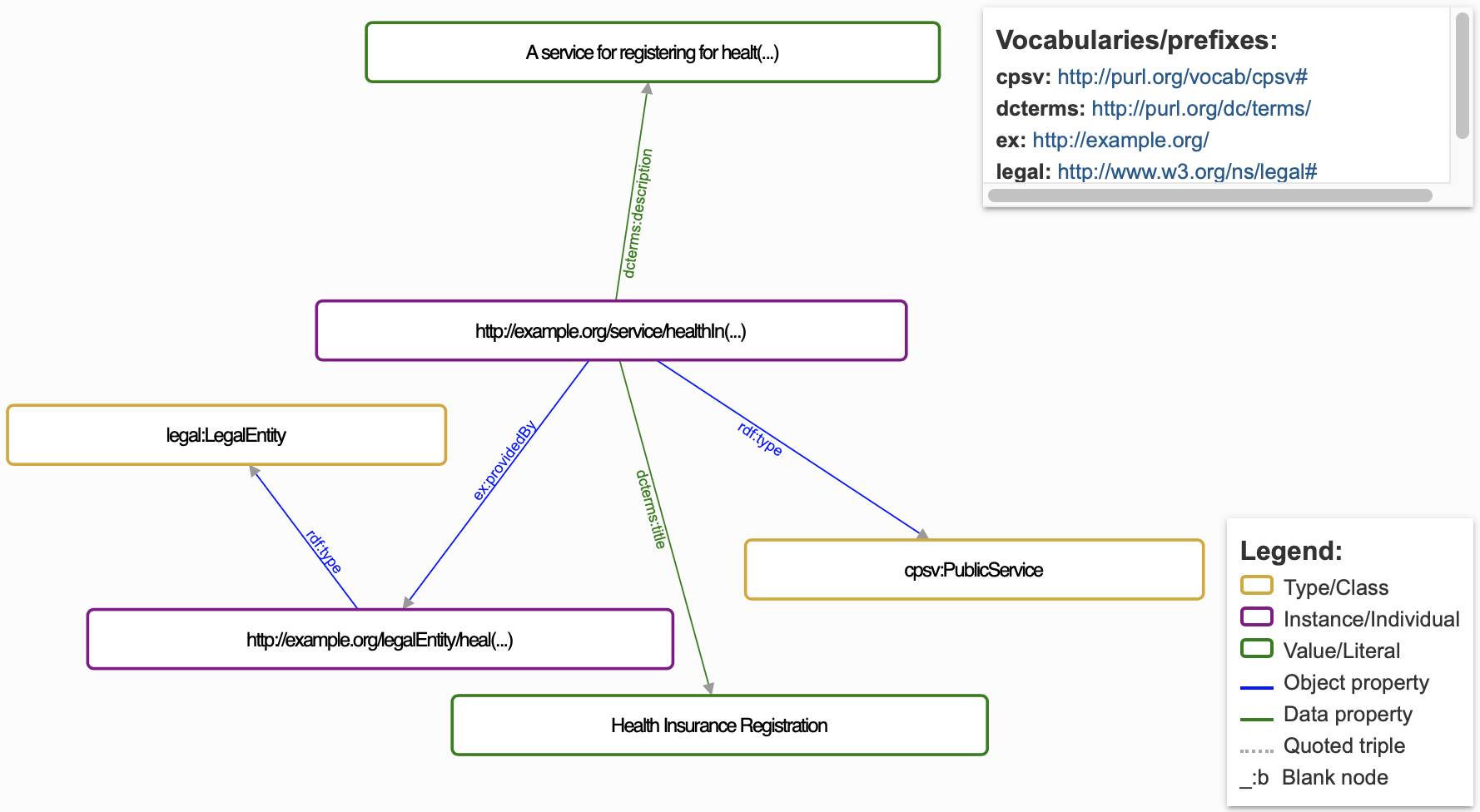

Example of a simple service instance

After defining the context and structure, you can now describe an actual Service instance by referencing the terms you defined earlier.
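The Service terms above live in the hypothetical example.com namespace and are not published as a remote context, so the following minimal sketch repeats the relevant definitions inline instead of importing them. It describes a Service with one required piece of evidence, embedded as a typed node, and one contact point referenced by its IRI. The hasRequiredEvidence property IRI and all example.org identifiers are placeholders chosen for illustration.

```json
{
  "@context": {
    "Service": "http://example.com/cpsvap#Service",
    "RequiredEvidence": "http://example.com/cpsvap#RequiredEvidence",
    "Service.name": {
      "@id": "http://purl.org/dc/terms/title",
      "@container": "@set"
    },
    "Service.hasRequiredEvidence": {
      "@id": "http://example.com/cpsvap#hasRequiredEvidence",
      "@type": "@id",
      "@container": "@set"
    },
    "Service.contactPoint": {
      "@id": "http://data.europa.eu/m8g/contactPoint",
      "@type": "@id",
      "@container": "@set"
    }
  },
  "@id": "http://example.org/service/1",
  "@type": "Service",
  "Service.name": [{ "@value": "Example service", "@language": "en" }],
  "Service.hasRequiredEvidence": [
    { "@id": "http://example.org/evidence/1", "@type": "RequiredEvidence" }
  ],
  "Service.contactPoint": ["http://example.org/contact/1"]
}
```

Embedding the evidence keeps the document a single tree rooted at the service, whereas referencing the contact point by IRI leaves its details to be described elsewhere; both forms are valid JSON-LD.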

Example scenario

Let’s assume a public administration offers a service called "Health Insurance Registration". This service allows citizens to register for health insurance, which requires certain documents (evidence) to complete the process. Citizens might need to contact the administration for guidance, and the service details should be structured in a way that makes it easy to share and integrate across systems.

To illustrate this, we create a JSON-LD representation of this service that highlights:

- the required evidence for registration (e.g., proof of address);
- the service's name and description, for clarity;
- contact information for users who may need assistance.

Try this in the JSON-LD Playground, then check your solution against the example below.

```json
{
  "@context": [
    "https://raw.githubusercontent.com/SEMICeu/CPSV-AP/master/releases/3.2.0/context/cpsv-ap.jsonld"
  ],
  "@id": "http://example.org/service/healthInsuranceRegistration",
  "@type": "PublicService",
  "PublicService.name": {
    "@value": "Health Insurance Registration",
    "@language": "en"
  },
  "PublicService.description": {
    "@value": "A service for registering for health insurance.",
    "@language": "en"
  }
}
```

Aliasing keywords for API compatibility (REST API example)

When working with REST APIs, it is often beneficial to alias certain JSON-LD keywords for simpler or more consistent representations in client applications. For example, you might alias JSON-LD’s @id to url and @type to type to make the data more intuitive for API consumers, especially when working with legacy systems or client-side frameworks that use specific naming conventions.

Example of aliasing keywords

```json
{
  "@context": {
    "url": "@id",
    "type": "@type",
    "Service": "http://purl.org/vocab/cpsv#PublicService",
    "Service.name": "http://purl.org/dc/terms/title",
    "Service.description": "http://purl.org/dc/terms/description"
  },
  "url": "http://example.com/service/healthInsuranceRegistration",
  "type": "Service",
  "Service.name": "Health Insurance Registration",
  "Service.description": "A service for registering for health insurance."
}
```

In this example, url is an alias for @id and type is an alias for @type.

By aliasing these terms, the API responses are simplified and more familiar to the developers interacting with the service, especially if they are accustomed to a different JSON structure.

Extend the context by reusing terms from Core Vocabularies

To highlight the reuse of terms from existing CVs, we can import the Core Business Vocabulary (CBV) context alongside the CPSV-AP context to gain access to business-related terms. This step ensures that you can use the additional terms from CBV, such as LegalEntity, Organisation, and ContactPoint, to enrich your Service descriptions.

```json
{
  "@context": [
    {
      "@import": "https://raw.githubusercontent.com/SEMICeu/CPSV-AP/master/releases/3.2.0/context/cpsv-ap.jsonld"
    },
    {
      "@import": "https://raw.githubusercontent.com/SEMICeu/Core-Business-Vocabulary/master/releases/2.2.0/context/core-business-ap.jsonld"
    }
  ]
}
```

Define additional properties from the Core Business Vocabulary

Add CBV terms to enhance the description of the Service entity by reusing existing concepts such as LegalEntity, which helps to specify who provides the service.

```json
{
  "@context": {
    "Service.providedBy": {
      "@id": "http://example.com/legal#providedBy",
      "@type": "@id"
    },
    "LegalEntity": "http://www.w3.org/ns/legal#LegalEntity"
  }
}
```

Here, `"@type": "@id"` declares that values of Service.providedBy are IRIs of other resources; that such a resource is a legal entity is stated on the resource itself with `"@type": "LegalEntity"`.

Map extended properties in a service instance

Use the extended properties to describe more aspects of Service instances. For example:

{

"@context": [